Discover the transformative power of Generative AI (GenAI) in organizations. This comprehensive guide explores integration strategies, use cases, and risk mitigation, offering insights to unlock the full potential of AI technology for improved productivity and business operations.

Transforming Organizations With Generative AI

Businesses are under immense pressure to adapt early to emerging technologies. This year, Generative Artificial Intelligence (GenAI) has opened up a plethora of opportunities for everyone, upending markets to the same extent the Internet or computers did a couple of decades ago.

However, the myriad of AI options available can often leave businesses at a loss. The key to successful integration of GenAI is to assess your current operations and identify areas where automation and streamlining can provide quick wins.

In this article, we will guide you through assessing your needs and AI use cases, help you better understand your industry-specific challenges, and provide a roadmap for implementation.

Asking the Right Questions

The first step in integrating GenAI is to assess your business needs, infrastructure, possible AI use cases, data privacy requirements and data sources. You need to decide whether you want to deploy your AI solution locally or on the cloud. You also have to choose between open-source solutions and provider APIs.

If you’re not equipped with a robust in-house IT and data infrastructure and a network that can handle enormous amounts of traffic, you might consider cloud solutions and service providers. If data scientists, engineers, analysts and IT professionals are also scarce in your organization, then, again, outsourcing will be needed. AI and Data Science consultancies can help figure all that out.

But which misconceptions most often need clearing up once you reach out to one of these consultancies?

Many businesses consider fine-tuning or re-training large language models (LLMs) like ChatGPT. As explained by our Senior Data Scientist in his latest article, there are a few reasons why this might not be the best approach:

- Data scarcity: LLMs like ChatGPT or GPT-4 have been trained on hundreds of billions of tokens. Most organizations do not have access to this amount of data, making it difficult to train their own models from scratch.

- Misconceptions about fine-tuning: Fine-tuning these models is not as straightforward as it seems. It requires creating at least 10k prompt-completion pairs, which is a time-consuming and resource-intensive process.

- Model obsolescence: Even if you manage to fine-tune your model, it will get outdated as more knowledge/documents arrive at your company. This means you will have to frequently repeat the fine-tuning process.

Instead of trying to fine-tune these models, a more efficient approach is to use Retrieval Augmented Generation (RAG).

How Does This Work?

RAG resolves the problem by turning the tables. Instead of incorporating all your data into LLMs, an AI-augmented search function will first identify the relevant parts from your sources and only send those to the Language Model.

The process involves three steps: storing your documents in chunked, indexed form for quick similarity calculation, selecting the most relevant chunks at runtime, and feeding these chunks to your LLM with appropriate instructions. By following these steps, you can effectively communicate with your organization's internal knowledge base.
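The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production setup: the bag-of-words `vectorize` function stands in for a real embedding model, the in-memory list stands in for a vector database, and all function names and sample documents are hypothetical. The final prompt would be sent to whichever LLM you use.

```python
import math
import re
from collections import Counter


def chunk(text, max_words=40):
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def vectorize(text):
    """Bag-of-words term counts (assumption: a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents):
    """Step 1: chunk every document and store each chunk with its vector."""
    return [(piece, vectorize(piece)) for doc in documents for piece in chunk(doc)]


def retrieve(index, question, top_k=2):
    """Step 2: pick the chunks most similar to the question at runtime."""
    q = vectorize(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [piece for piece, _ in ranked[:top_k]]


def build_prompt(question, passages):
    """Step 3: hand only the selected chunks to the LLM, with instructions."""
    context = "\n\n".join(passages)
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical internal documents, for illustration only.
documents = [
    "Employees may carry over up to five unused vacation days into the next year.",
    "Expense reports must be submitted within thirty days of the purchase.",
    "The office is closed on national holidays.",
]
index = build_index(documents)
question = "How many vacation days can I carry over?"
passages = retrieve(index, question, top_k=1)
prompt = build_prompt(question, passages)
```

Only the vacation policy chunk ends up in the prompt, so the model never sees the unrelated documents. When new documents arrive, they are simply added to the index; the model itself stays untouched.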

Still, several considerations need to be made, including context length, document preprocessing, handling different file formats, and the validation of these models' completions. Most likely, you’ll need Data Scientists (the real AI experts) for a successful integration.
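To illustrate the context length consideration: retrieved passages have to fit into the model's context window together with the instructions and the question. The sketch below keeps the best-ranked passages that fit a budget. It is a simplified example; `pack_context` is a hypothetical helper, and counting words is only a crude proxy for tokens, since real tokenizers count differently.

```python
def pack_context(ranked_passages, max_tokens):
    """Keep the best-ranked passages that fit the budget, preserving rank order."""
    selected, used = [], 0
    for passage in ranked_passages:
        cost = len(passage.split())  # assumption: one word is roughly one token
        if used + cost > max_tokens:
            continue  # this passage would overflow; a shorter one may still fit
        selected.append(passage)
        used += cost
    return selected, used


# Hypothetical passages, already sorted from most to least relevant.
ranked = [
    "Vacation days carry over until March.",
    "Expense reports are due within thirty days of purchase.",
    "Offices close on holidays.",
]
selected, used = pack_context(ranked, max_tokens=10)
```

With a budget of 10, the first and third passages are kept and the second is skipped because it would exceed the limit.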

Expect the Unexpected

AI use cases vary across industries, and so do the associated risks.

For example, in Healthcare, AI can revolutionize patient care and claims processing, and even help with the image-based diagnosis of X-ray or CT scans. But risks like privacy breaches and regulatory non-compliance must be addressed, and we’re still a long way from unsupervised AI diagnostics.

Similar cases can be made for data and AI in Banking, Retail, Manufacturing, Telecommunications or Tech as a whole.

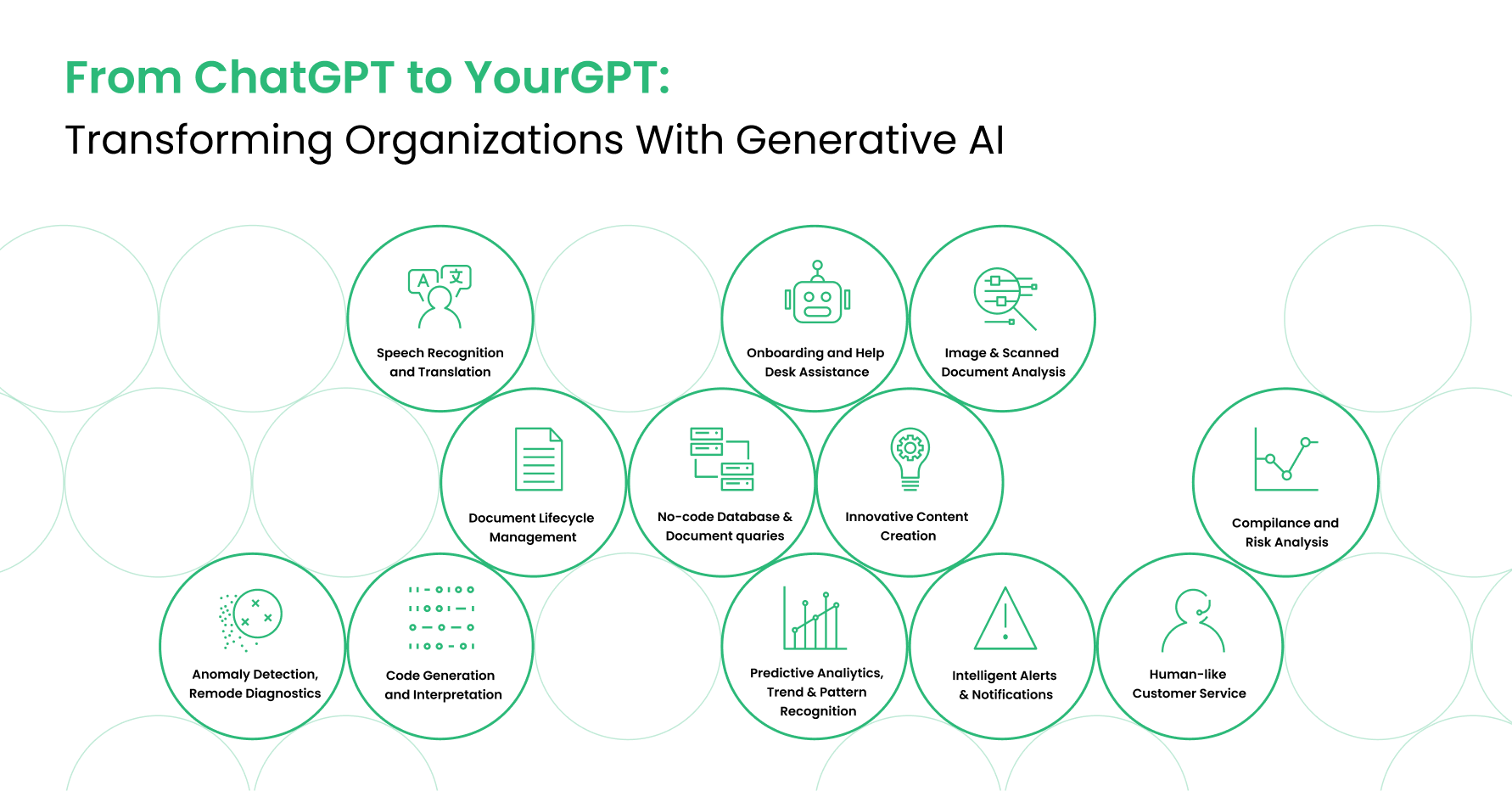

But all these industry-specific use cases are derived from AI technologies that are, in truth, sector-agnostic.

Common use-cases:

- Document Lifecycle Management

- No-code Database & Document queries

- Innovative Content Creation

- Intelligent Alerts & Notifications

- Onboarding and Help Desk Assistance

- Human-Like Customer Service

- Compliance and Risk Analysis

- Hyper-Personalization in Marketing

- Predictive Analytics, Trend & Pattern Recognition

- Anomaly Detection, Remote Diagnostics

- Image & Scanned Document Analysis

- Speech Recognition and Translation

- Code Generation and Interpretation

Every person, organization, government and enterprise must find the best ways to utilize new opportunities, and as such, no two AI experiences will be exactly the same.

While AI presents numerous opportunities, it's crucial to consider the associated risks.

These include:

- Potential data breaches

- Misclassification of information

- Generation of off-brand content

- User fatigue

- Non-compliance with regulations

- Over-personalization

- Inaccurate predictions

- False positives in anomaly detection

- Misinterpretation of intent

- Missed nuances

- Questionable quality and security of the generated code

Implementing robust measures to mitigate these risks is paramount to fully harness the benefits of AI.

Microsoft has also recently released an Azure OpenAI Transparency Note, in which it lists recommended use cases — and when not to use AI.

The automation of every digital workflow is here, and AI governance needs to focus on safety, privacy, and catching errors before they do any real damage. So do not leave your AI unattended. Always review its outputs and make sure that competent human supervision is in place. The machine, once again, is not here to take jobs, but to improve productivity.

Despite industry-specific challenges, the versatility of AI makes it an attractive choice across sectors. In essence, it is industry-agnostic. But you will need to provide effective governance according to the specific applications you’ll use. That will need rigorous testing, continuous monitoring and strict adherence to carefully set guidelines.

Roadmap for AI Implementation – in 5 Easy Steps

We've broken down the process of AI implementation into five easy steps.

Depending on the complexity of your needs, building a proof of concept and deploying an AI solution can take anywhere from 2 weeks to a couple of months.

1. Audit

Our consultants and AI experts assess your business needs, infrastructure, possible AI use cases, data privacy requirements and data sources. Based on this analysis, we will provide you with a comprehensive report that outlines the optimal AI solution for your company, including recommendations on cloud or local deployment and on open-source or API options, weighing both the development and long-term overhead costs of each option.

2. Design

Next, we will work closely with your team to develop a detailed concept and create a roadmap for AI integration. This involves determining the required architecture, selecting appropriate models, defining the training dataset, and deciding between fine-tuning existing models and using prompt techniques to achieve the desired results.

3. Prototype

Building upon the design phase, we will create a proof of concept that serves as the foundation for your AI-augmented ecosystem. This prototype will allow us to assess the cost-effectiveness and performance of the proposed solution, enabling us to make necessary adjustments and optimizations before proceeding further. Our goal is to find the most cost-effective and best-performing setup.

4. Implementation

With the prototype ready, we scale up and bring our design to life. Our goal is to make it accessible to your company as early as possible, smoothing out any kinks to ensure you can fully leverage your new AI solution.

5. Follow-up

We always follow up on our projects to ensure end-user satisfaction with the delivered solution. Keeping up with the latest trends, we can also provide updates if needed.

Let's Work This Out Together

Implementing Generative AI into your IT infrastructure can be a challenging yet rewarding endeavor.

Our fully integrated and fine-tuned systems perform with over 95% recall and accuracy. However, what is even more important is the return on your investment. With AI-augmented ecosystems, enterprises can now reduce the time spent on menial tasks by 50%, resulting in cost savings of the same magnitude.

https://www.youtube.com/watch?v=LMd72joPpmo&ab_channel=Hiflylabs

If this article left you wanting, check out this webinar on where we currently are with GenAI and LLM integration into company workflows, software and knowledge bases.

Three industry experts will show you the ropes. Tony Aug from Nimble Gravity takes the lead as the moderator. Kristof Rábay, Hiflylabs' Lead Data Scientist, showcases the tangible business value of Generative AI in a live demo, while Pujit Mehrotra, CTO of Legislaide, explores GenAI in legal processes.

With the right guidance and strategic planning, you can harness the full potential of AI to revolutionize your business operations.

Consultancies like ours are equipped to guide you through this journey, providing expert-guided implementation, quick delivery of proofs of concept and hands-on product demos that help your workforce adopt the new solutions more easily.

We are also open to just bouncing ideas around. Give us a call, and have at us!