What do tinkering at home and working at a data & AI company have in common? While there’s no punchline here, a useful intersection of these seemingly unrelated areas is digital twinning. When one of our clients pitched the idea of an IoT coffee machine to showcase the tech, the cogs in my head started turning: how could I combine hobby and expertise to create a small-scale example?

But what is a digital twin?

In short, digital twins are digital replicas of physical systems, used to simulate complex, large-scale processes. This, in turn, allows for in-depth modeling, analysis, and prediction across a wide array of functions. Think manufacturing, where machinery often spans multiple buildings and factory-wide overviews are a must.

We’ve done such projects in the past; head over to our case studies to find out how an aluminum manufacturer eliminated defects in its products. In the manufacturing or energy industries, where every second of downtime could cost a small fortune, being aware of every single detail is crucial. This is where digital twins shine.

By fitting existing, often gigantic machines with a set of sensors and creating a digital version of them, you can monitor various components and features in real time, from anywhere. From mining to processing and extrusion, keeping an eye on oil pressure, temperatures, and vibrations can help predict wear and damage. This allows you to schedule maintenance periods with ease, resulting in virtually no unexpected downtime and immense cost savings down the line.

The scale can vary, and even though it’s not an entirely new concept, many are unfamiliar with it. We wanted to showcase how its capabilities can come in handy even for something common. At Hiflylabs, we have worked with digital twins before and have practical experience with AI and data analytics in manufacturing. So the task wasn’t a novelty, but since the company is a team of data experts, hardware tinkering had previously been off the table.

Understanding the basics

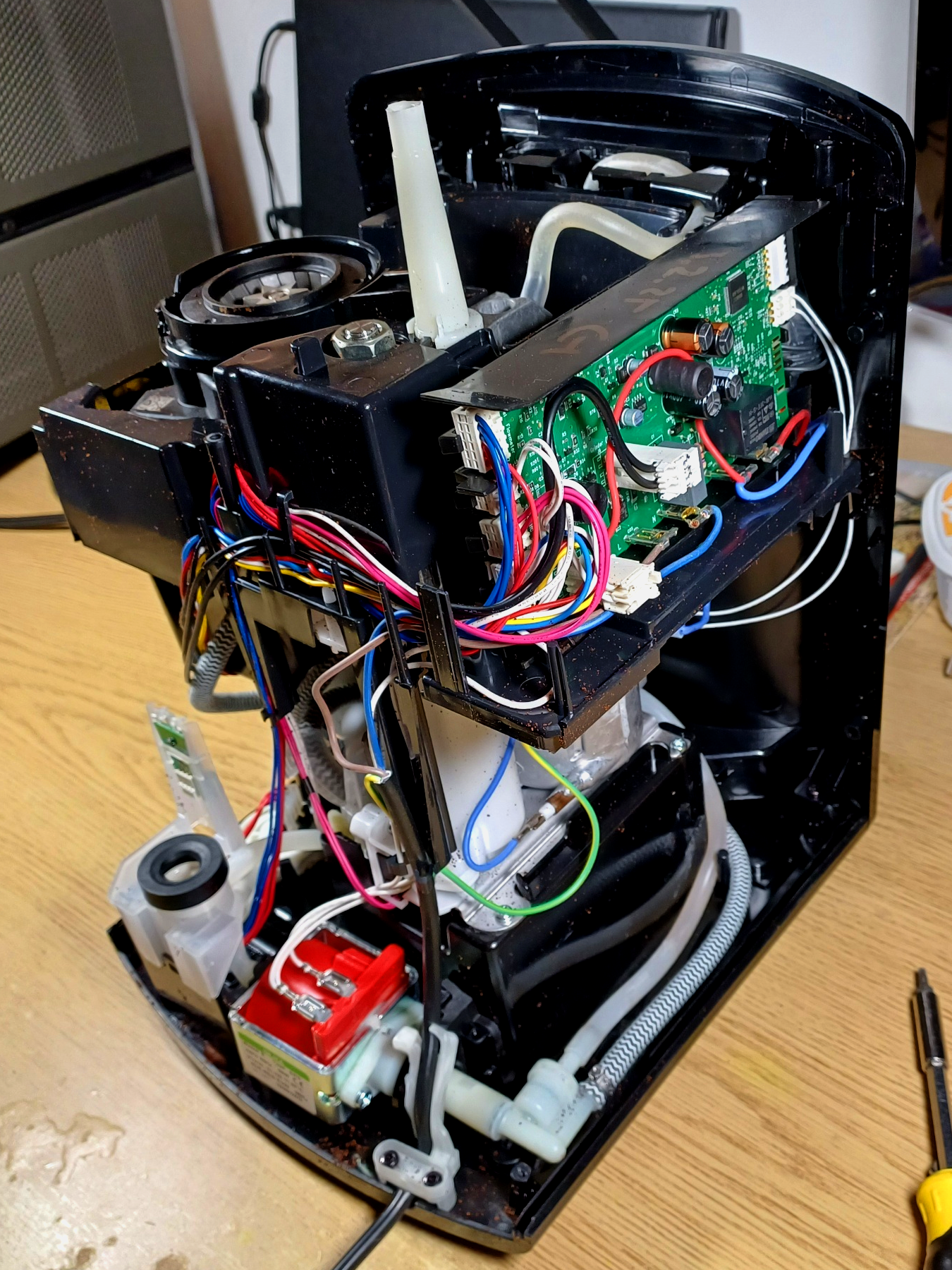

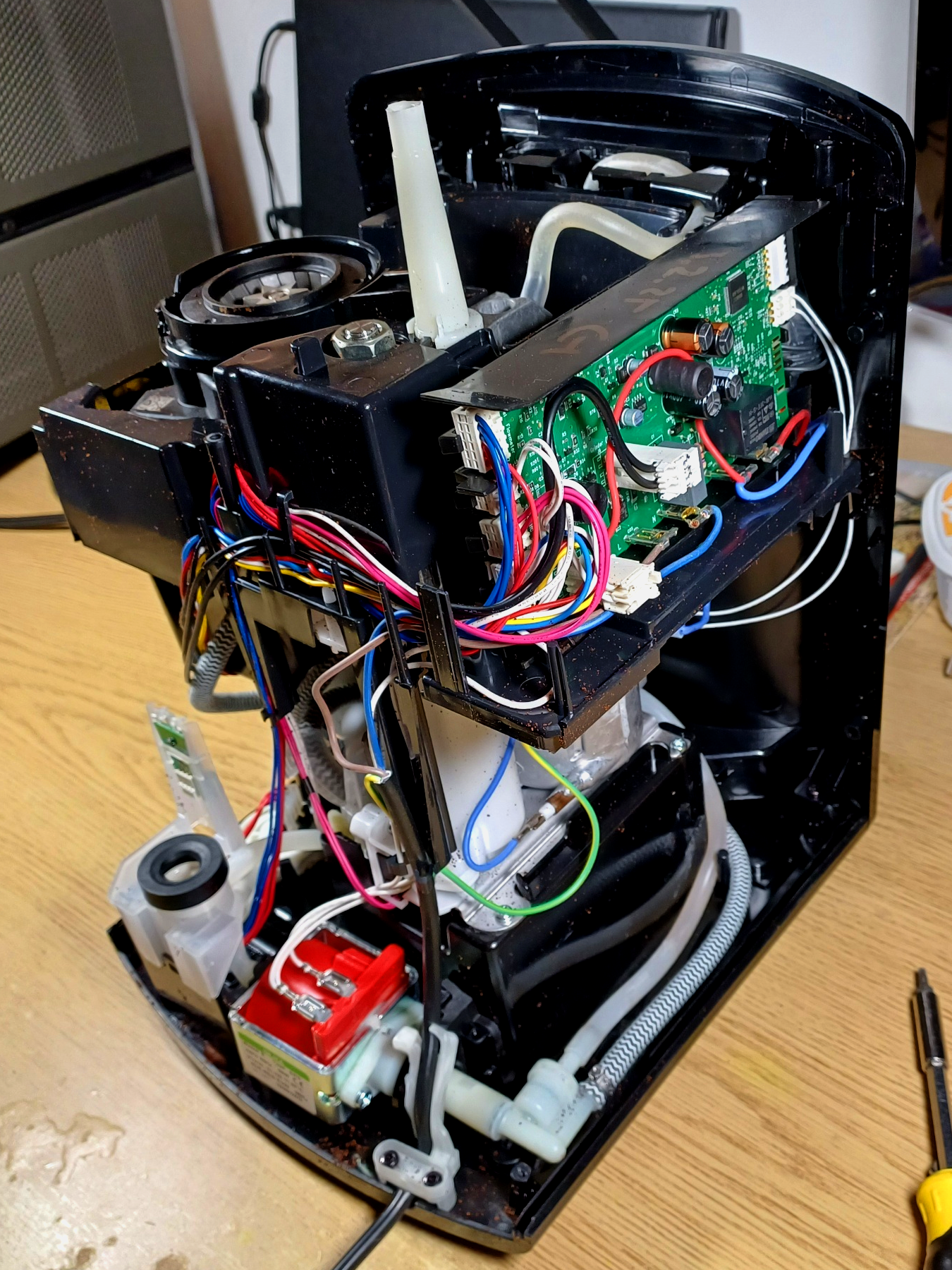

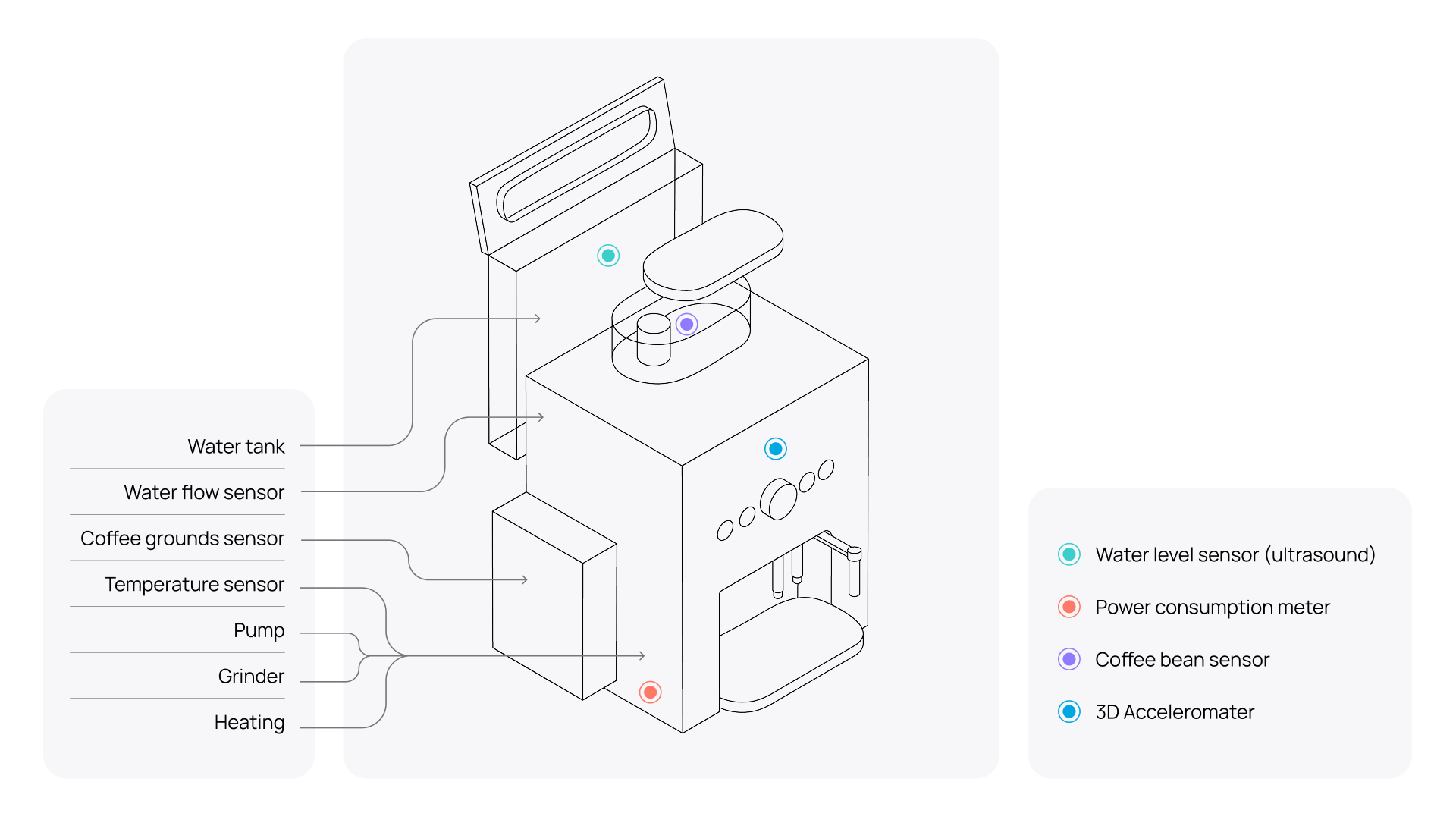

The process started with some reverse engineering (to put it less nicely, I tore the machine down to bits to see what we were working with). Besides the usual functions of monitoring water levels, flow, heating, whether the pump and the grinder were on, and whether the coffee grounds tray was attached, I noticed something cool. Unlike most regular coffee machines, this one didn’t have a press and used a hydraulic solution instead.

After getting a grasp of the mechanics, it was time to see how the electronics operate. It’s not the most complicated thing, since the functions it needs to cover are pretty bare-bones, but it gave us the outline for the upgrades down the line.

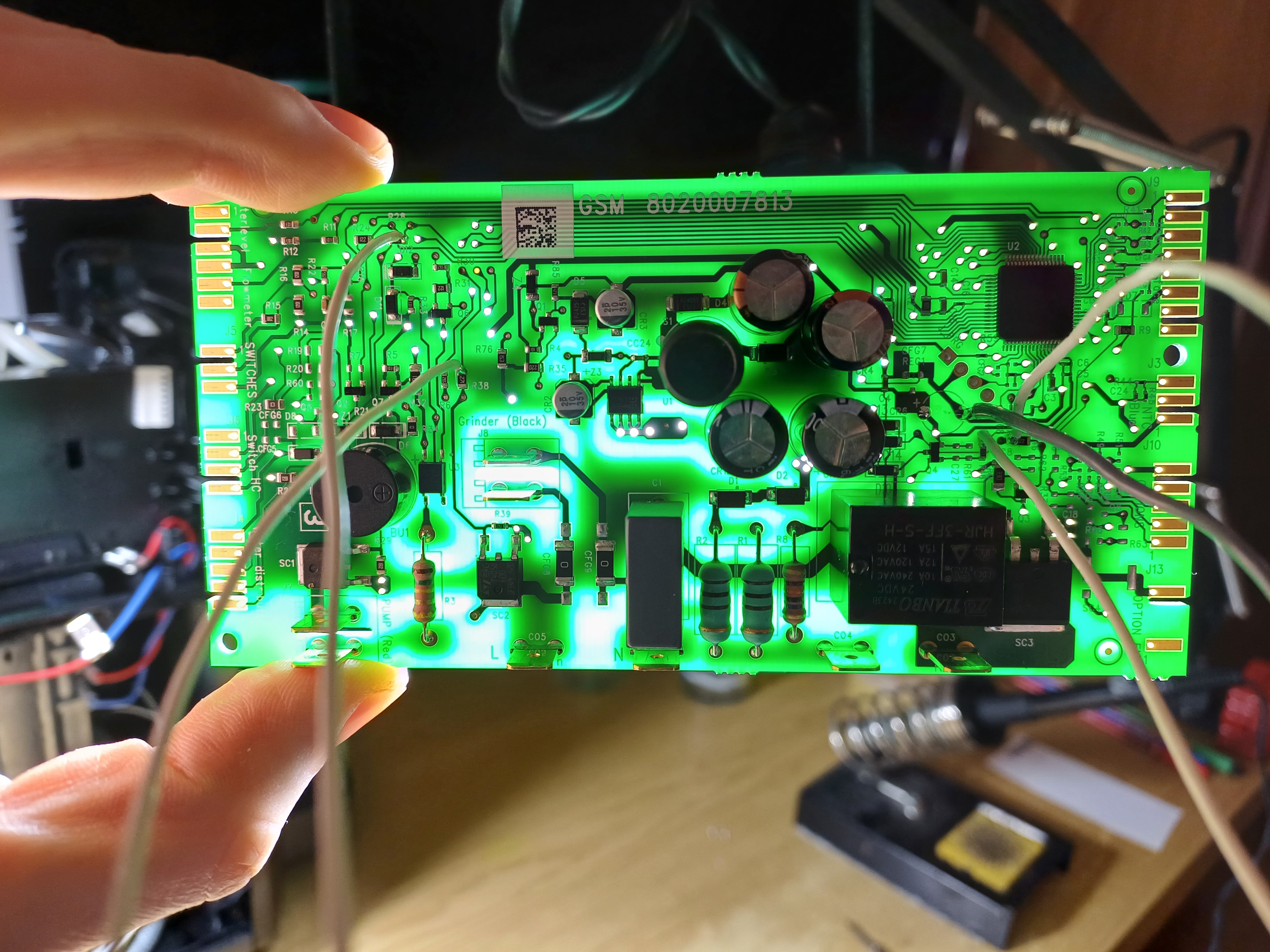

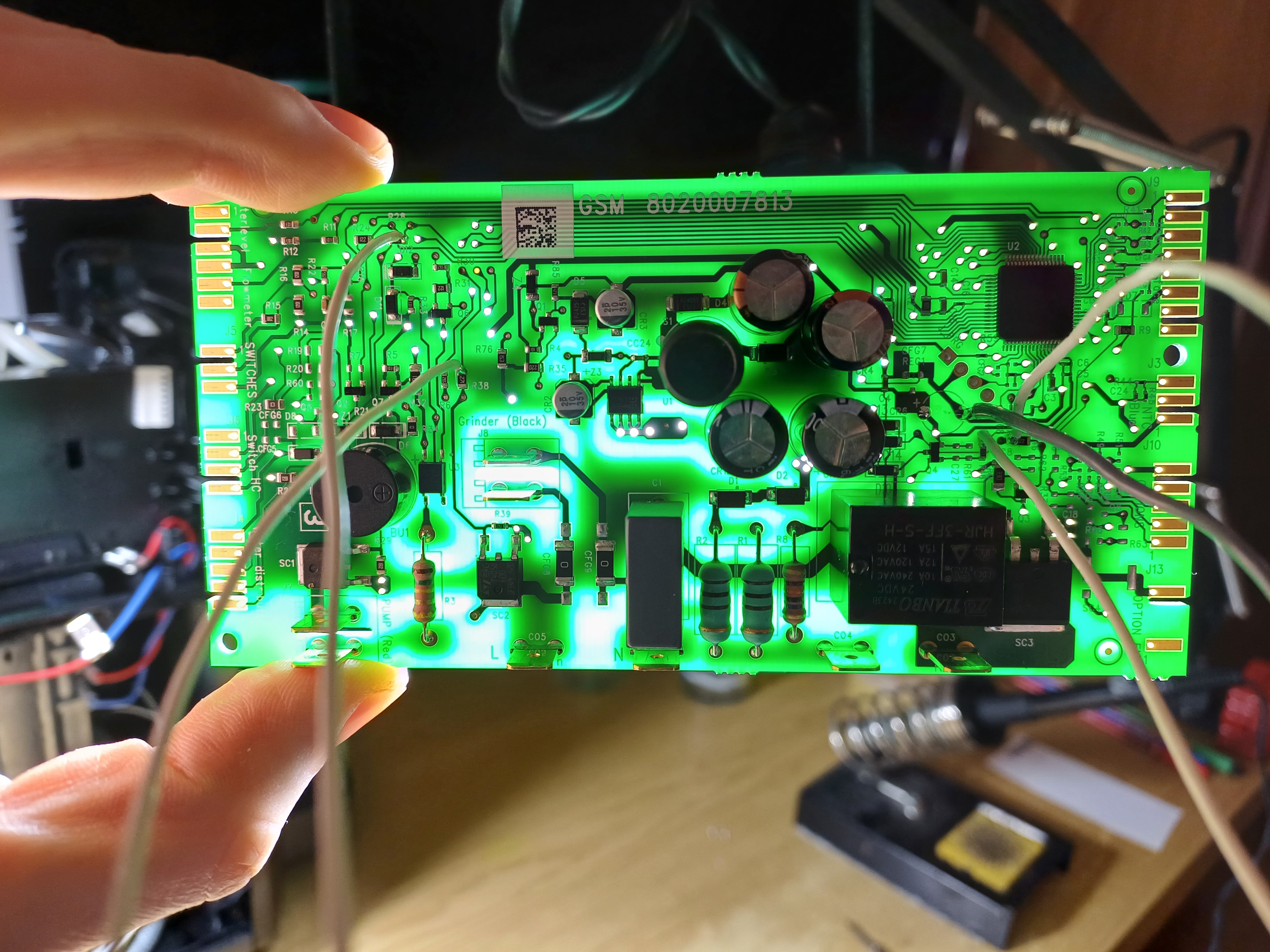

The reverse engineering was very exciting: I got to trace every connection on the original control panel PCB and decode the signals and protocols. For example, some lines carried analog levels, such as the front panel buttons, where each button was represented by a different voltage level.
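To illustrate how buttons sharing one analog line can be told apart, here is a minimal sketch that maps a raw ADC reading to a button by checking which voltage band it falls into. The button names, the band limits, and the `decodeButton` helper are hypothetical placeholders; the machine’s real levels are not listed in this article, and a 12-bit ADC range (0 to 4095) is assumed.

```cpp
#include <cstdint>

// Hypothetical button set; the real panel's buttons are not listed in the article.
enum class Button : std::uint8_t { None, Power, Espresso, Lungo, Steam };

struct ButtonBand {
    std::uint16_t minRaw;  // inclusive lower ADC bound
    std::uint16_t maxRaw;  // inclusive upper ADC bound
    Button button;
};

// Assumed bands for a 12-bit ADC (0..4095). Placeholder values for illustration only.
// The gaps between bands act as dead zones so noisy readings are ignored.
constexpr ButtonBand kBands[] = {
    {300, 700, Button::Power},
    {1000, 1400, Button::Espresso},
    {1900, 2300, Button::Lungo},
    {2800, 3200, Button::Steam},
};

// Returns the button whose band contains the raw reading, or Button::None.
Button decodeButton(std::uint16_t raw) {
    for (const ButtonBand& band : kBands) {
        if (raw >= band.minRaw && raw <= band.maxRaw) {
            return band.button;
        }
    }
    return Button::None;
}
```

On the microcontroller, something like `decodeButton(analogRead(BUTTONS))` would feed it; the bands themselves would have to be measured on the actual hardware.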

Spicing up our coffee

Now comes the fun part, and a bit of frankensteining.

We needed something that allowed us to connect the machine to the network. Just go for a Raspberry Pi, right? Besides being overkill in terms of performance, a Pi physically wouldn’t have fit either, so we looked into Arduino-like microcontrollers instead.

We settled on the ESP32 by Espressif. There’s definitely an espresso pun in there somewhere, but that’s not why we went with it. Rather, it’s small enough and has more than enough performance to do what we need. However, the coffee machine itself operates with a 5V logic level while the ESP32 works with 3.3V, so a level shifter was required. For some reason, though, the factory-made level shifter board was oscillating, so I custom-made one that ended up working well.

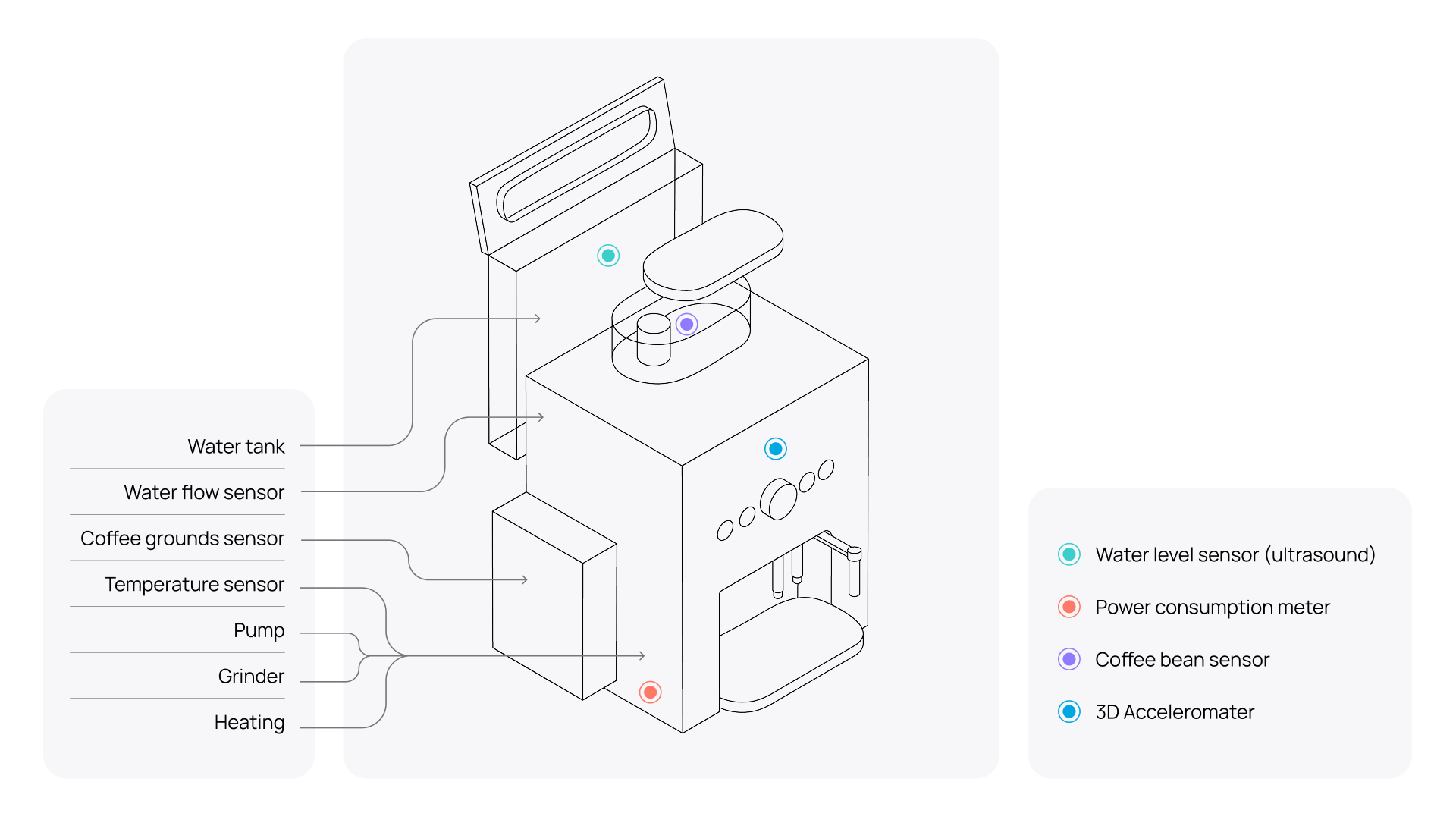

Next up, all the unique bits we equipped the machine with:

- An ultrasonic sensor to check water levels

- A power consumption meter

- An optical infrared sensor to monitor coffee beans

- A 3D accelerometer to detect uneven surfaces and irregular vibrations
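As an illustration of how an ultrasonic reading can become a water level, here is a rough sketch that converts a round-trip echo time into a distance and then into a fill percentage. The function names and the two calibration distances (`emptyCm`, `fullCm`) are assumptions made for this example, not values taken from the machine.

```cpp
#include <algorithm>

// Speed of sound in air at roughly 20 °C, in centimetres per microsecond.
constexpr double kSoundCmPerUs = 0.0343;

// Converts a round-trip echo time (microseconds) to a one-way distance (cm).
double echoToDistanceCm(double echoUs) {
    return echoUs * kSoundCmPerUs / 2.0;
}

// Converts the sensor-to-surface distance into a fill level between 0 and 100 %.
// emptyCm: distance measured with an empty tank (assumed calibration value).
// fullCm:  distance measured with a full tank (assumed calibration value).
double waterLevelPercent(double distanceCm, double emptyCm, double fullCm) {
    if (emptyCm <= fullCm) {
        return 0.0;  // invalid calibration: the sensor sits above the water
    }
    double level = (emptyCm - distanceCm) / (emptyCm - fullCm) * 100.0;
    return std::max(0.0, std::min(100.0, level));
}
```

Clamping keeps the result sane when the sensor briefly reports a distance outside the calibrated range.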

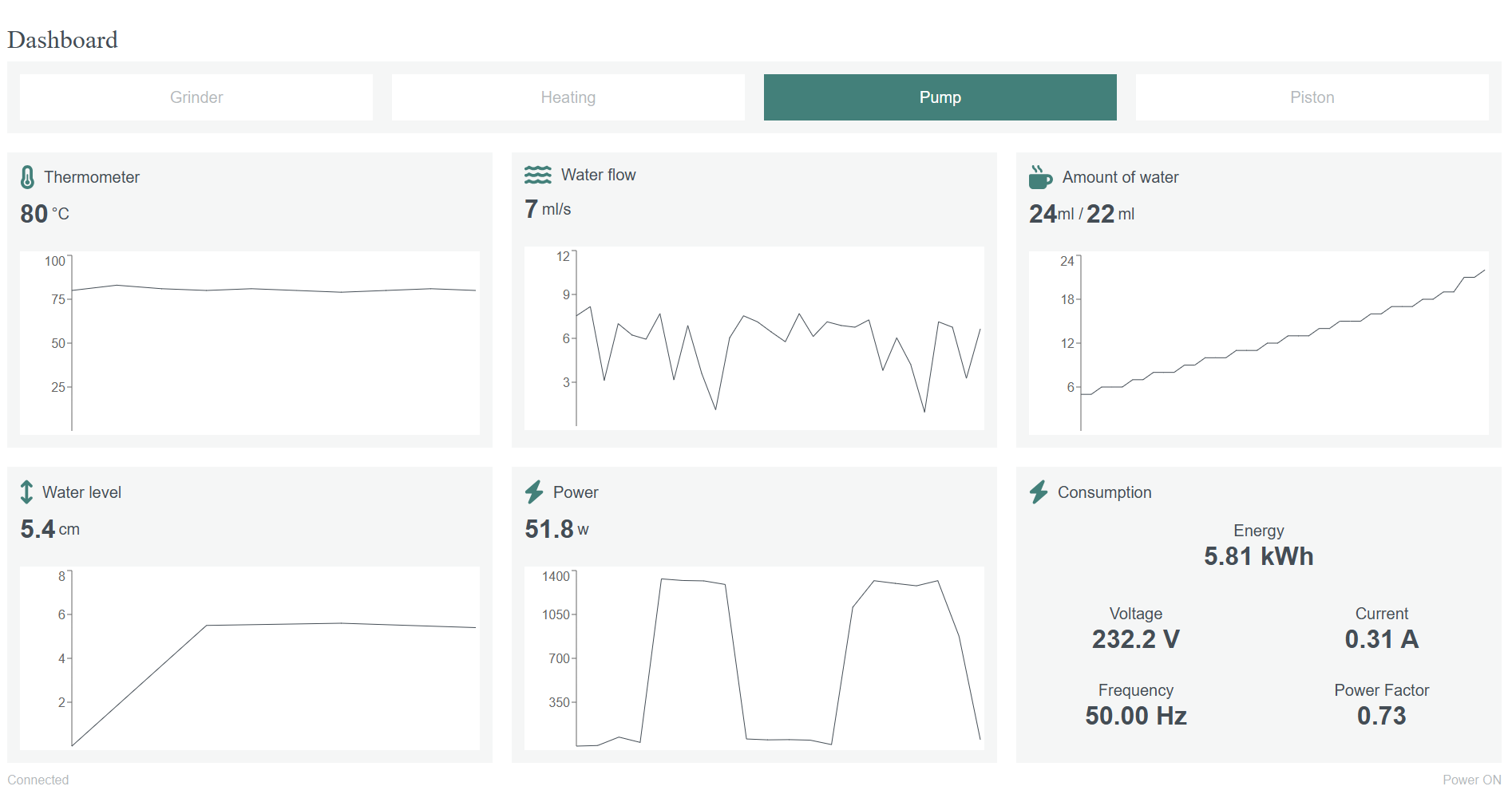

The power consumption meter showed us, for example, that brewing a cup of coffee pushes power use to around 1 kW for a short while, whereas the pump and grinder together use less than 40 W. Talking hypothetically and on a larger scale, this is extremely useful information, as it allows us to see how external factors like changes in temperature affect power consumption.

On a smaller scale, this was the code responsible for transmitting power consumption data to the dashboard:

    // Reads the PZEM power meter every 250 ms and sends the values to the
    // dashboard only when at least one of them has changed noticeably.
    // The globals used here (pzem, currentTime, last*Sent, ...) are defined
    // elsewhere in the sketch.
    void managePowerMeasurement() {
    #ifdef DEV_MODE
      return;  // skip power measurement in development builds
    #endif
      if (powerOnValue == ON && currentTime - lastPowerMeasurement >= 250) {
        voltage = pzem.voltage();
        current = pzem.current();
        power = pzem.power();
        energy = pzem.energy();
        frequency = pzem.frequency();
        factor = pzem.pf();

        // transmit only on a valid reading that differs from the last one sent
        if (!isnan(voltage) && voltage > 0 && (
              abs(voltage - lastVoltageSent) > 1 ||
              abs(current - lastCurrentSent) > 0.5 ||
              abs(power - lastPowerSent) > 1 ||
              abs(energy - lastEnergySent) > 0.1 ||
              abs(frequency - lastFrequencySent) > 0.1 ||
              abs(factor - lastFactorSent) > 0.1
            )) {
          sendData("POWER_VOLTAGE", "%.1f", voltage);
          sendData("POWER_CURRENT", "%.2f", current);
          sendData("POWER_POWER", "%.2f", power);
          sendData("POWER_ENERGY", "%.2f", energy);
          sendData("POWER_FREQUENCY", "%.2f", frequency);
          sendData("POWER_FACTOR", "%.2f", factor);

          // remember what was sent so the next comparison is against it
          lastVoltageSent = voltage;
          lastCurrentSent = current;
          lastPowerSent = power;
          lastEnergySent = energy;
          lastFrequencySent = frequency;
          lastFactorSent = factor;
        }
        lastPowerMeasurement = currentTime;
      }
    }

Of course, we had to tweak a few things to make it work properly. Some of the sensors, such as the accelerometer, were extremely sensitive by default and alerted us even during the machine’s regular operation. This part was more of a software development challenge than a tinkering one, since each component had its own libraries and protocols that required a unique setup.
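One common way to tame an oversensitive accelerometer is to ignore small deviations and only react when a larger one persists for several samples. The sketch below shows that idea under stated assumptions: readings are in g, the machine at rest measures about 1 g, and the `VibrationDetector` class with its threshold and sample count is a hypothetical helper, not the tuning used on the machine.

```cpp
#include <cmath>

// One accelerometer sample, in units of g (assumed).
struct Accel {
    double x;
    double y;
    double z;
};

// Flags irregular vibration only after the deviation from 1 g has stayed
// above a threshold for a number of consecutive samples.
class VibrationDetector {
public:
    VibrationDetector(double thresholdG, int requiredSamples)
        : thresholdG_(thresholdG), requiredSamples_(requiredSamples) {}

    // Feeds one sample; returns true while the vibration counts as irregular.
    bool update(const Accel& a) {
        double magnitude = std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z);
        double deviation = std::fabs(magnitude - 1.0);  // at rest, only gravity remains
        if (deviation > thresholdG_) {
            ++streak_;
        } else {
            streak_ = 0;  // a single calm sample resets the streak
        }
        return streak_ >= requiredSamples_;
    }

private:
    double thresholdG_;
    int requiredSamples_;
    int streak_ = 0;
};
```

Raising the threshold makes the detector ignore the normal rumble of the pump and grinder, while the sample count filters out single spikes.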

Bringing it online

While the ESP32 has Wi-Fi, there’s nothing quite as reliable as a wired connection, so to avoid potential interference, we went with a good old USB cable.

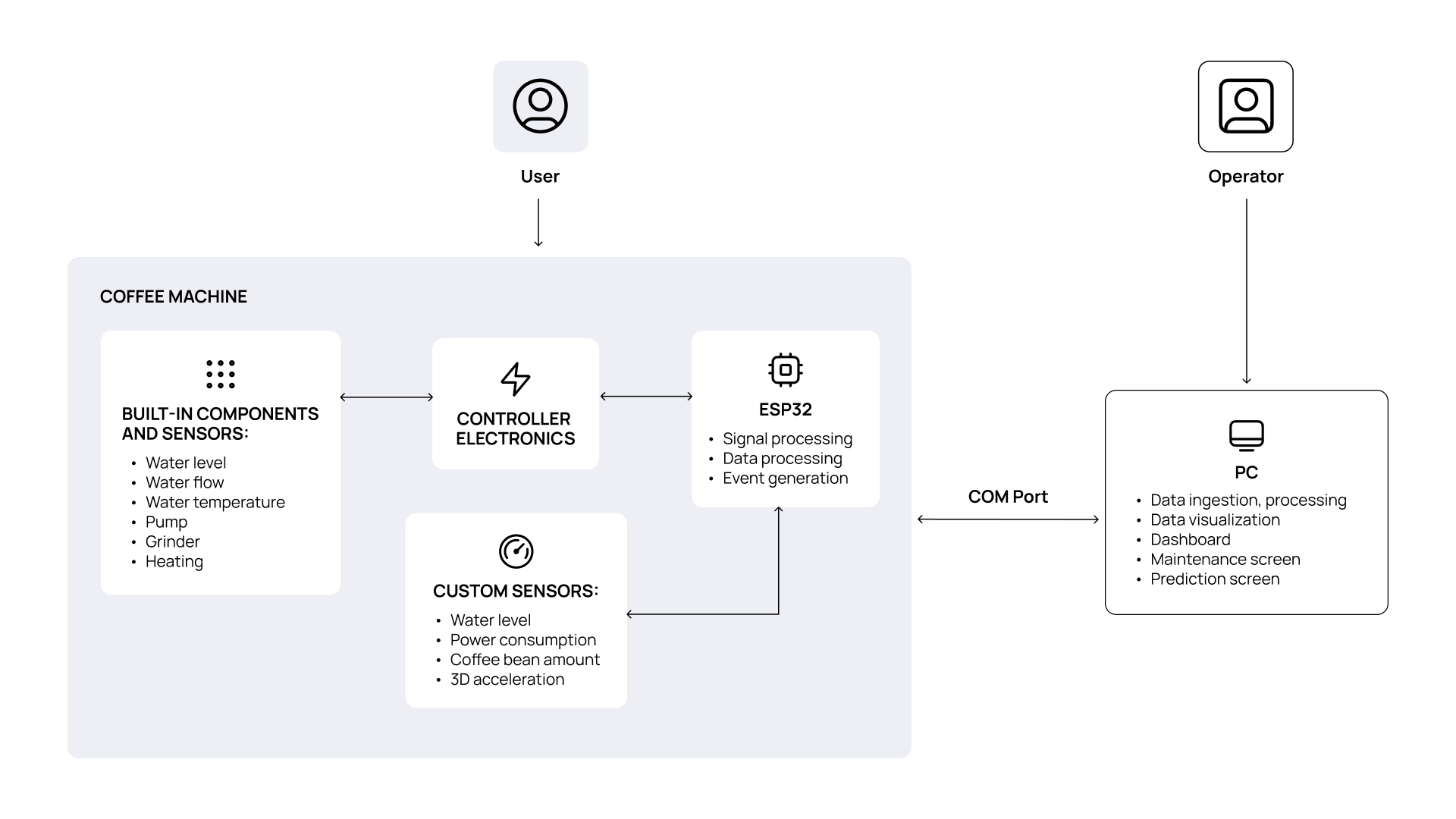

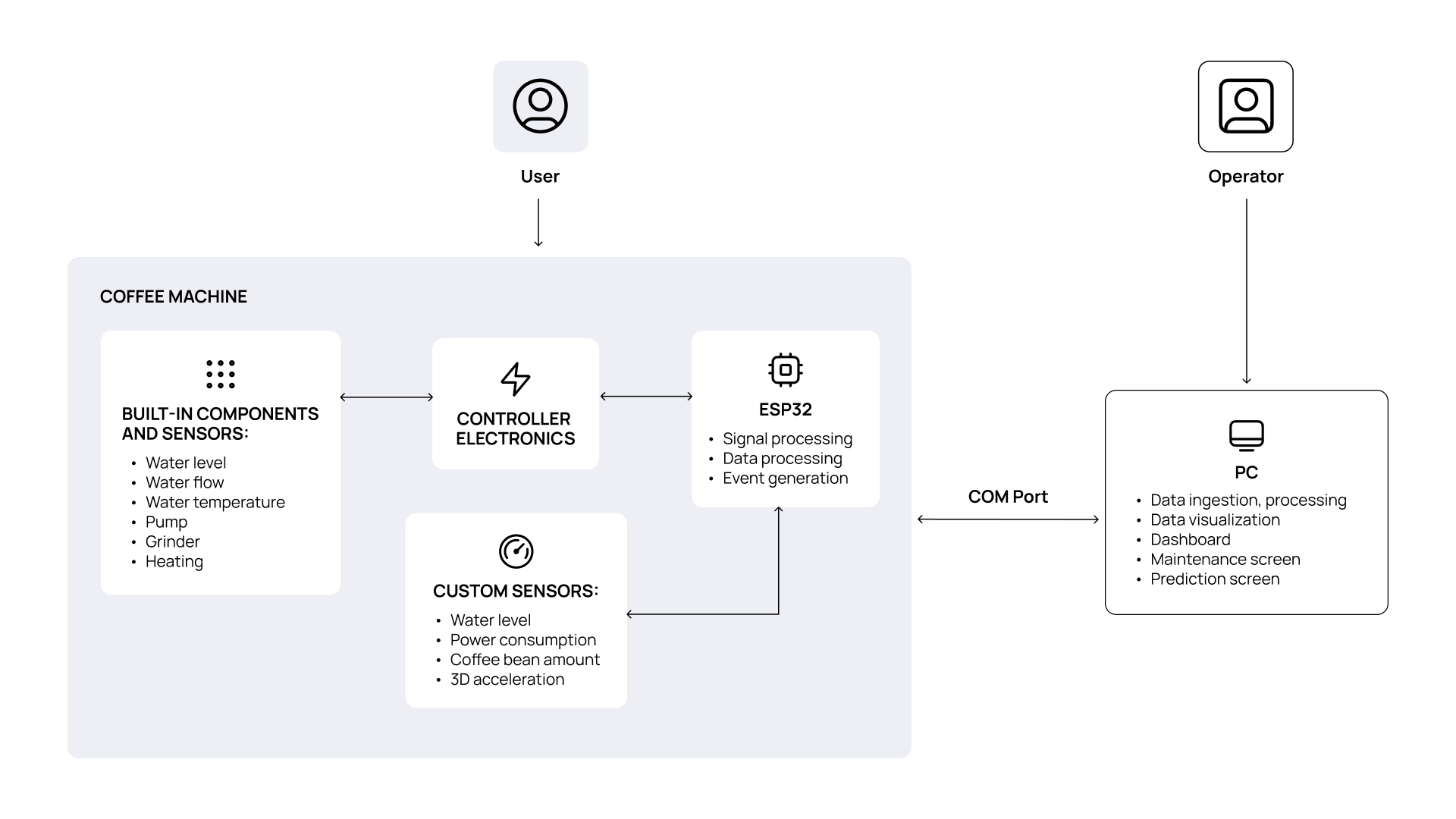

Before handing the data over to our dashboard, some of the logging had to be tweaked on the coffee maker’s side. Take the grounds tray, for example: you never need a continuous stream of its status, so transmissions like this were changed to be event-based on the ESP32 itself. After all that was done, this is what the information flow looked like between the machine and the PC the dashboard ran on:
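A minimal sketch of what event-based reporting can look like: instead of sending the tray status on every loop, a message goes out only when the state has changed and stayed stable for a short debounce period. The `TrayReporter` class, its debounce time, and the assumption that the tray starts out attached are all hypothetical and not taken from the actual firmware.

```cpp
#include <cstdint>

// Reports the grounds tray state only when it changes and stays stable.
class TrayReporter {
public:
    explicit TrayReporter(std::uint32_t debounceMs) : debounceMs_(debounceMs) {}

    // Feeds the current switch reading and a millisecond timestamp.
    // Returns true exactly once per stable state change, i.e. when an
    // event should be transmitted.
    bool update(bool attached, std::uint32_t nowMs) {
        if (attached != candidate_) {
            candidate_ = attached;     // new candidate state, restart the timer
            candidateSince_ = nowMs;
        }
        if (candidate_ != reported_ && nowMs - candidateSince_ >= debounceMs_) {
            reported_ = candidate_;    // state held long enough: report it
            return true;
        }
        return false;
    }

    bool reported() const { return reported_; }

private:
    std::uint32_t debounceMs_;
    bool reported_ = true;   // assumed initial state: tray attached
    bool candidate_ = true;
    std::uint32_t candidateSince_ = 0;
};
```

The unsigned subtraction keeps the elapsed-time check valid even when a millisecond counter wraps around.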

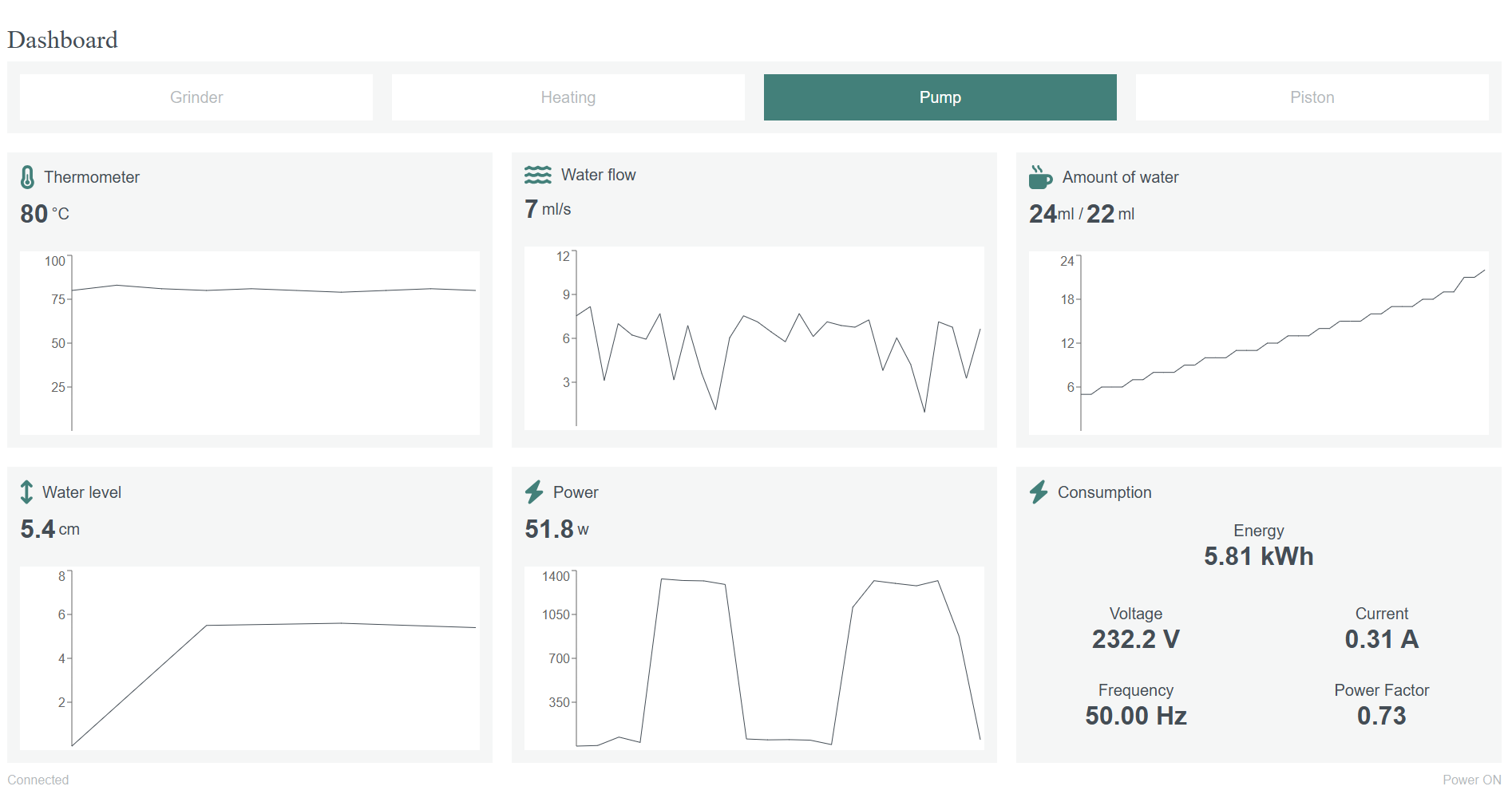

The data was then handed over to a browser-based frontend where we created a dashboard with three different screens. Here’s the code that handled analog data transmission:

    // Samples the analog inputs every 10 ms. Globals and helper functions
    // are defined elsewhere in the sketch.
    void manageAnalogReads() {
      if (currentTime - lastAnalogRead >= 10) {
        endSwitchValue = analogRead(END_SWITCH);
        waterDistributorValue = analogRead(WATER_DISTRIBUTOR);
        buttonsValue = analogRead(BUTTONS);
        ntcValue = analogRead(NTC);
        waterQuantityValue = analogRead(WATER_QUANTITY);

        // the buttons are analog inputs with discrete states;
        // they are handled even when the machine is switched off
        manageButtonsState(buttonsValue);

        if (powerOnValue == ON) {
          // manage the other discrete-state components read via analog inputs
          manageEndSwitchState(endSwitchValue);
          manageWaterDistributorState(waterDistributorValue);
    #ifndef DEV_MODE
          // send the analog sensor levels every 0.5 s
          sendAnalogValues(ntcValue, waterQuantityValue);
    #endif
        }
        lastAnalogRead = currentTime;
      }
    }

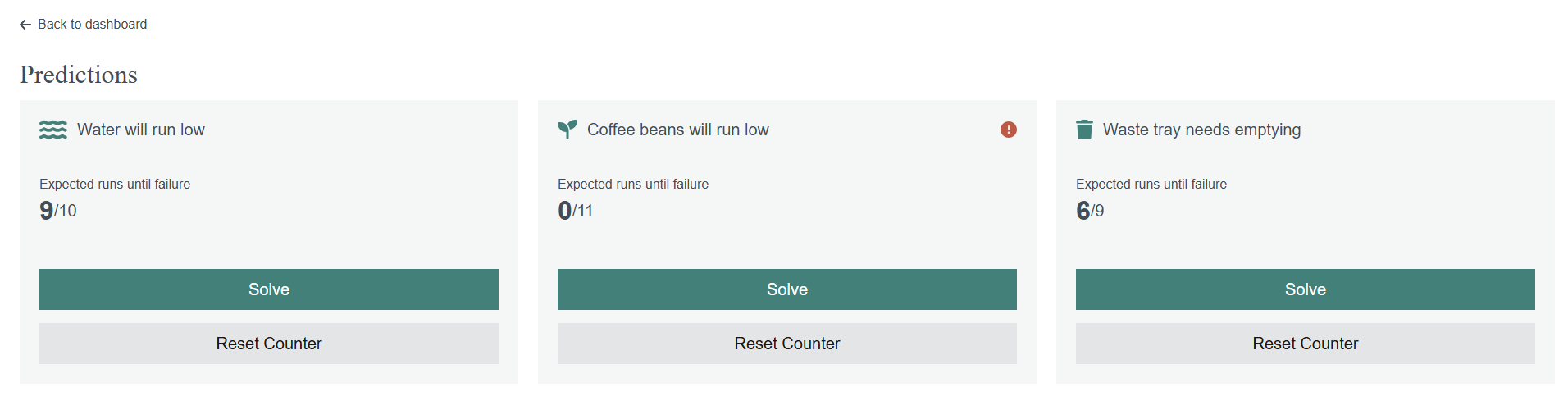

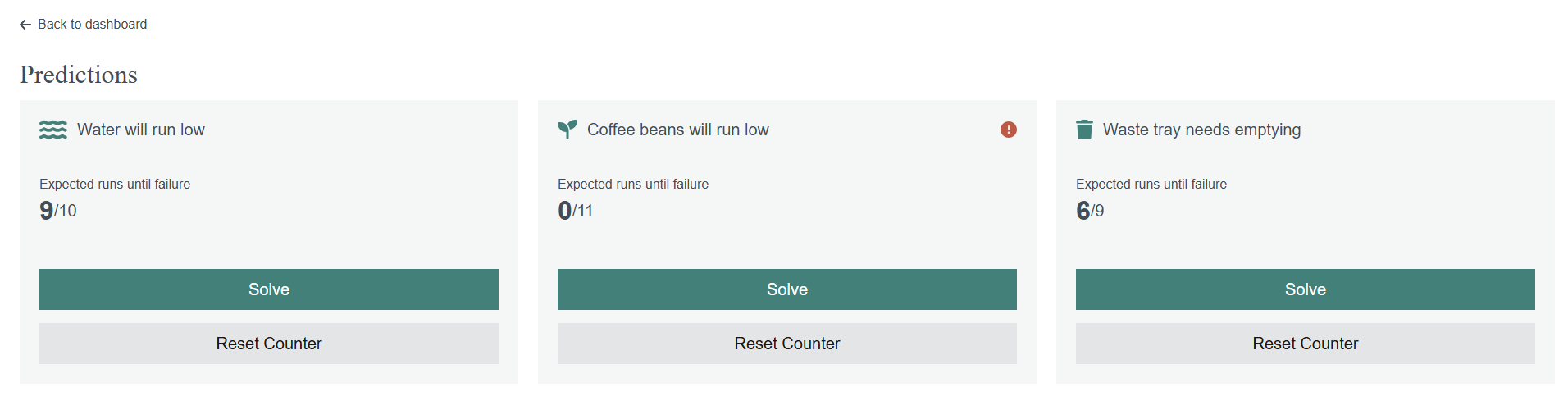

One of the dashboard screens was a default overview with all real-time data, another allowed basic maintenance of the internals, such as resetting timers and counters, while the last one was a predictive dashboard. The latter is the showstopper and the goldmine in larger-scale uses.

Predictive analytics allows you to forecast future maintenance, resulting in less downtime and saving you money down the line. In the case of the coffee machine, I went with a basic implementation: estimating how many coffees we can still brew before something needs a refill. On a longer time scale, it could also forecast when limescale removal is due based on water quality, but that’s a use case spanning months and years.
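To make the basic estimate concrete, here is a small sketch of one way to compute it: the number of cups left is limited by whichever supply runs out first. The struct names, the per-cup amounts, and the assumption that the bean quantity is known in grams are all made up for illustration; the actual dashboard logic is not shown in this article.

```cpp
#include <algorithm>

// Current supplies in the machine (assumed to be known from the sensors).
struct Supplies {
    double waterMl;
    double beansG;
};

// How much one cup consumes (hypothetical recipe values).
struct Recipe {
    double waterMlPerCup;
    double beansGPerCup;
};

// Returns how many full cups can still be brewed before the first refill.
int cupsRemaining(const Supplies& s, const Recipe& r) {
    if (r.waterMlPerCup <= 0.0 || r.beansGPerCup <= 0.0) {
        return 0;  // invalid recipe, nothing sensible to predict
    }
    int byWater = static_cast<int>(s.waterMl / r.waterMlPerCup);
    int byBeans = static_cast<int>(s.beansG / r.beansGPerCup);
    // the scarcer resource decides; never report a negative count
    return std::max(0, std::min(byWater, byBeans));
}
```

The same pattern extends to further limits, such as how many pucks still fit in the grounds tray, by adding another term to the minimum.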

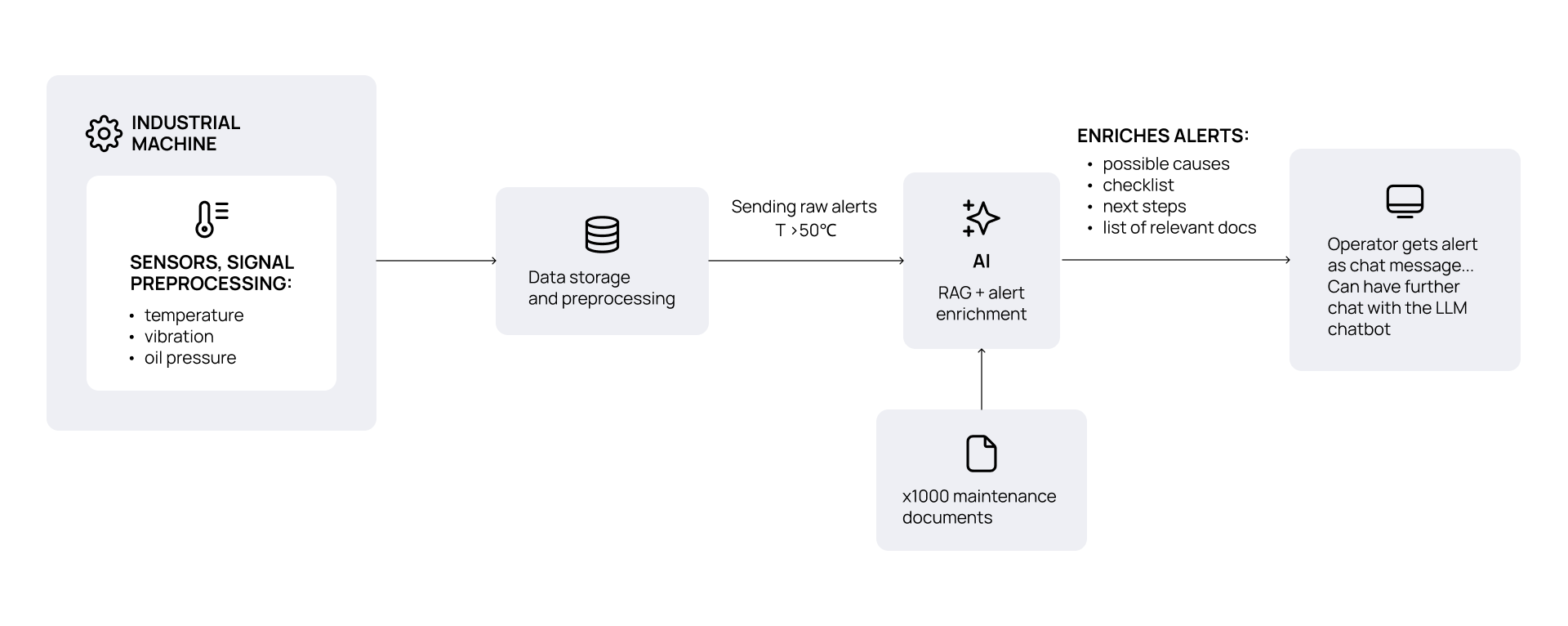

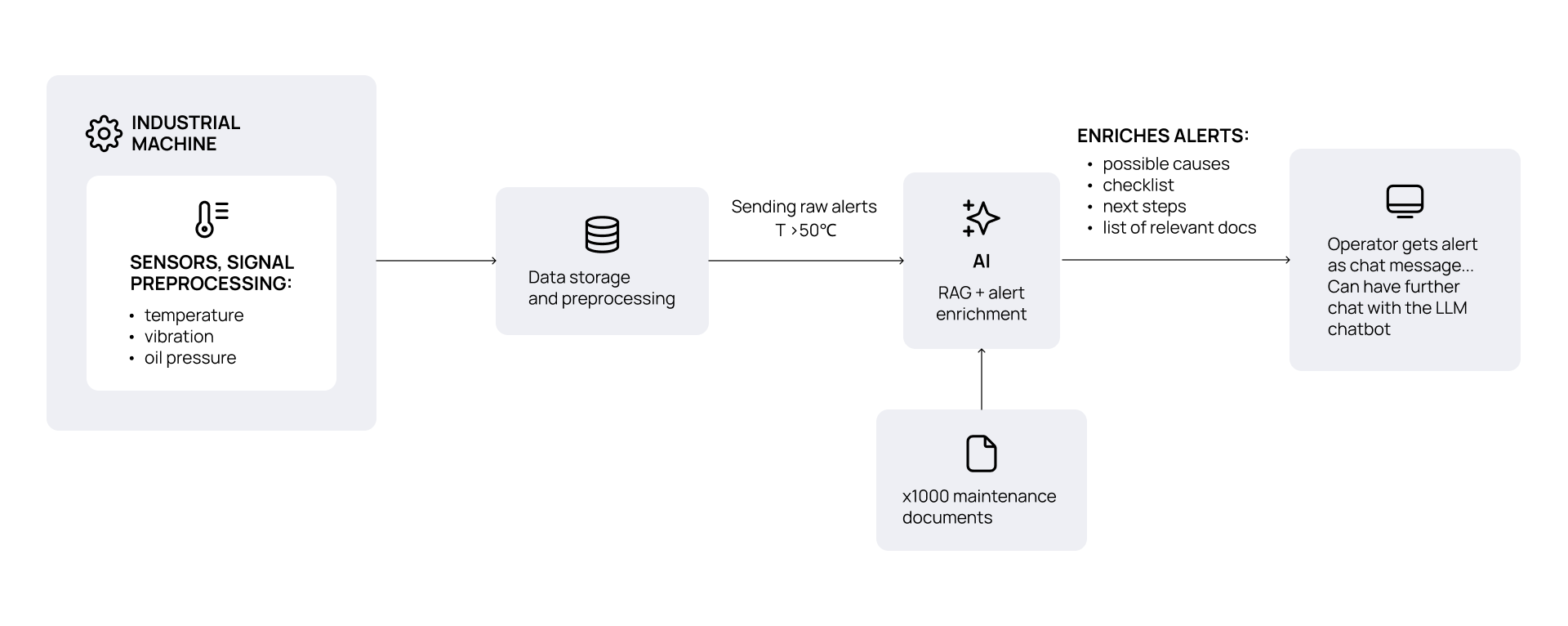

Another future improvement could contribute to quality assurance: for example, by adding a heat sensor, we could monitor coffee temperatures. Alternatively, you could vastly improve user experience by adding real-time alerts when certain thresholds are reached, and by generating a to-do list for users based on those alerts. With a RAG-based solution, you can even enrich these alerts with references to the relevant parts of the user manual, making sure that it’s not just a raw, technical alert, but useful and understandable information.
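As a sketch of how such a threshold alert might avoid flooding the user, the example below raises an alert once when a level drops below a trigger value and only re-arms after the level has recovered above a higher value (hysteresis). The `LowLevelAlert` class and its two thresholds are hypothetical and are not part of the current machine.

```cpp
// Fires once when a level drops below triggerBelow and re-arms only after
// the level has risen above clearAbove (clearAbove should exceed triggerBelow).
class LowLevelAlert {
public:
    LowLevelAlert(double triggerBelow, double clearAbove)
        : triggerBelow_(triggerBelow), clearAbove_(clearAbove) {}

    // Feeds the latest level; returns true only on the transition into alert.
    bool update(double level) {
        if (!active_ && level < triggerBelow_) {
            active_ = true;
            return true;   // new alert: notify the user once
        }
        if (active_ && level > clearAbove_) {
            active_ = false;  // recovered, e.g. after a refill
        }
        return false;
    }

    bool active() const { return active_; }

private:
    double triggerBelow_;
    double clearAbove_;
    bool active_ = false;
};
```

The gap between the two thresholds prevents a reading that hovers around the limit from triggering the same alert over and over.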

Even with that much room for upgrades, the machine has been the star of many presentations and shows at several of our client’s sites. It’s already been to Oslo, and in a few weeks, it will be the highlight of an IoT showcase in Amsterdam.

Scaling it up

In real-world enterprise environments, digital twins are used on a much larger scale than just coffee machines. Applying AI ontologies to your processes allows you to build a brain for your organization and optimize your workflows, customized to your specific environment.

By logging the results from your digital twin and analyzing them with ML, you can identify points that need intervention easily and in time. That lets you schedule maintenance ahead, saving your organization precious time and immense costs.