
If you're involved in marketing, PR, or account management, AI language models probably already play a role in your content creation, copywriting and email campaigns. But are you familiar with Chain-of-Thought prompting? This method can enhance the efficiency of language models, particularly for tasks demanding intricate reasoning or extended context. Enhance your outcomes right away with our article!

In recent months, we have researched how various industries use large language models. Our series started with the legal sector, and we plan to cover retail use cases soon. This article focuses on marketing, and on how chain prompting can raise the efficiency and quality of content creation.

Large language models (LLMs) are transforming the marketing industry, automating tasks like copywriting, email campaigns, and research. But most marketers only use language models in a simple way, giving them a prompt and stopping at the first answer they get.

This is a mistake, because LLMs have many limitations.

To get the most out of generative AI and work around most of these limitations, you can use a technique called Chain-of-Thought prompting (or chain prompting for short). It involves giving the LLM a series of instructions that build on each other, guiding the AI step by step, which tends to produce more comprehensive and accurate results.

In this article, we will discuss the benefits of different chain prompting patterns to improve the quality of your workflows.

Guiding an AI model step by step can be done in a number of different ways. These patterns are not interchangeable; each serves a different purpose and suits different applications.

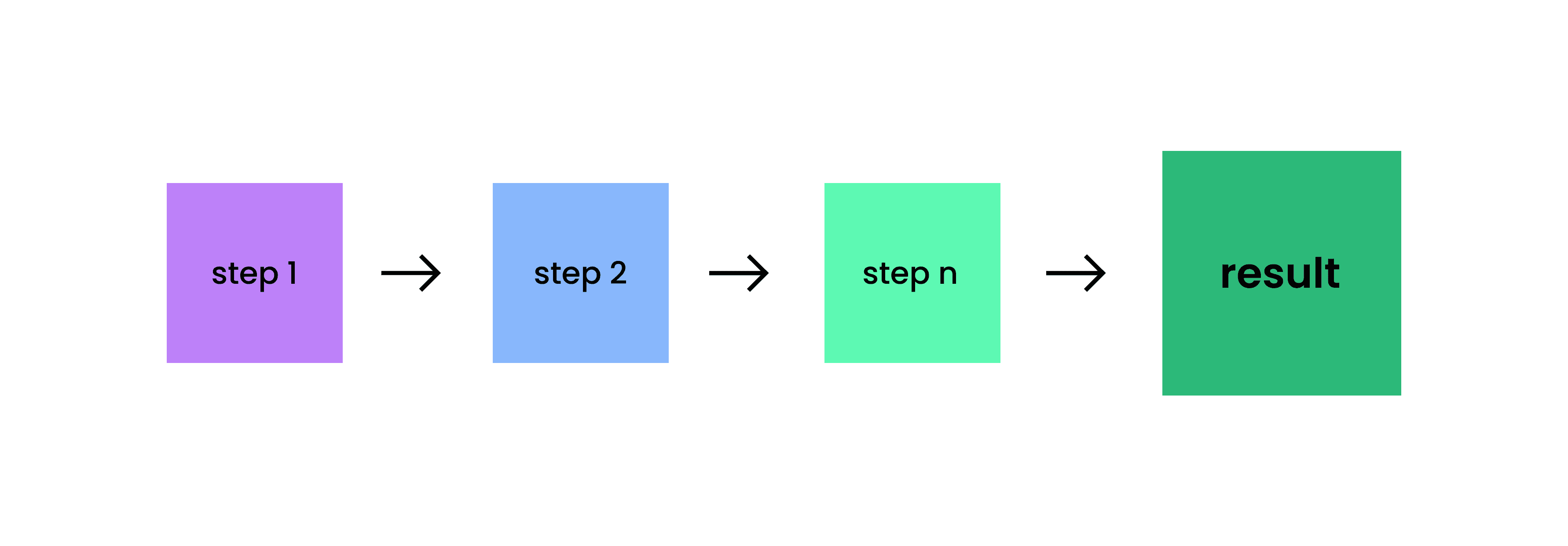

Simple and straightforward, you give one instruction after another, iteratively building towards the best possible output for your needs. This is the most basic pattern of Chain-of-Thought prompting, and is also called Cascade prompting.
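As a rough illustration, a sequential chain can be sketched in a few lines of Python. Everything named here is an assumption made for the example: `run_chain` and `echo_llm` are hypothetical helpers, and `echo_llm` is a stand-in that simply echoes the prompt so the sketch runs without any API key. In practice you would pass in a function that calls the model of your choice.

```python
from typing import Callable, List


def run_chain(llm: Callable[[str], str], steps: List[str], seed: str) -> List[str]:
    """Run prompts in order, feeding each output into the next step."""
    outputs = []
    previous = seed
    for template in steps:
        # Each template has a {previous} slot for the prior step's output.
        prompt = template.format(previous=previous)
        previous = llm(prompt)
        outputs.append(previous)
    return outputs


def echo_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return f"[answer to: {prompt}]"


steps = [
    "List three angles for a product launch email about: {previous}",
    "Pick the strongest angle from this list and draft a subject line: {previous}",
    "Tighten this subject line to under 50 characters: {previous}",
]
drafts = run_chain(echo_llm, steps, "a reusable water bottle")
```

Keeping every intermediate output in `drafts`, rather than only the last one, makes it easier to see which step in the chain needs a better prompt.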

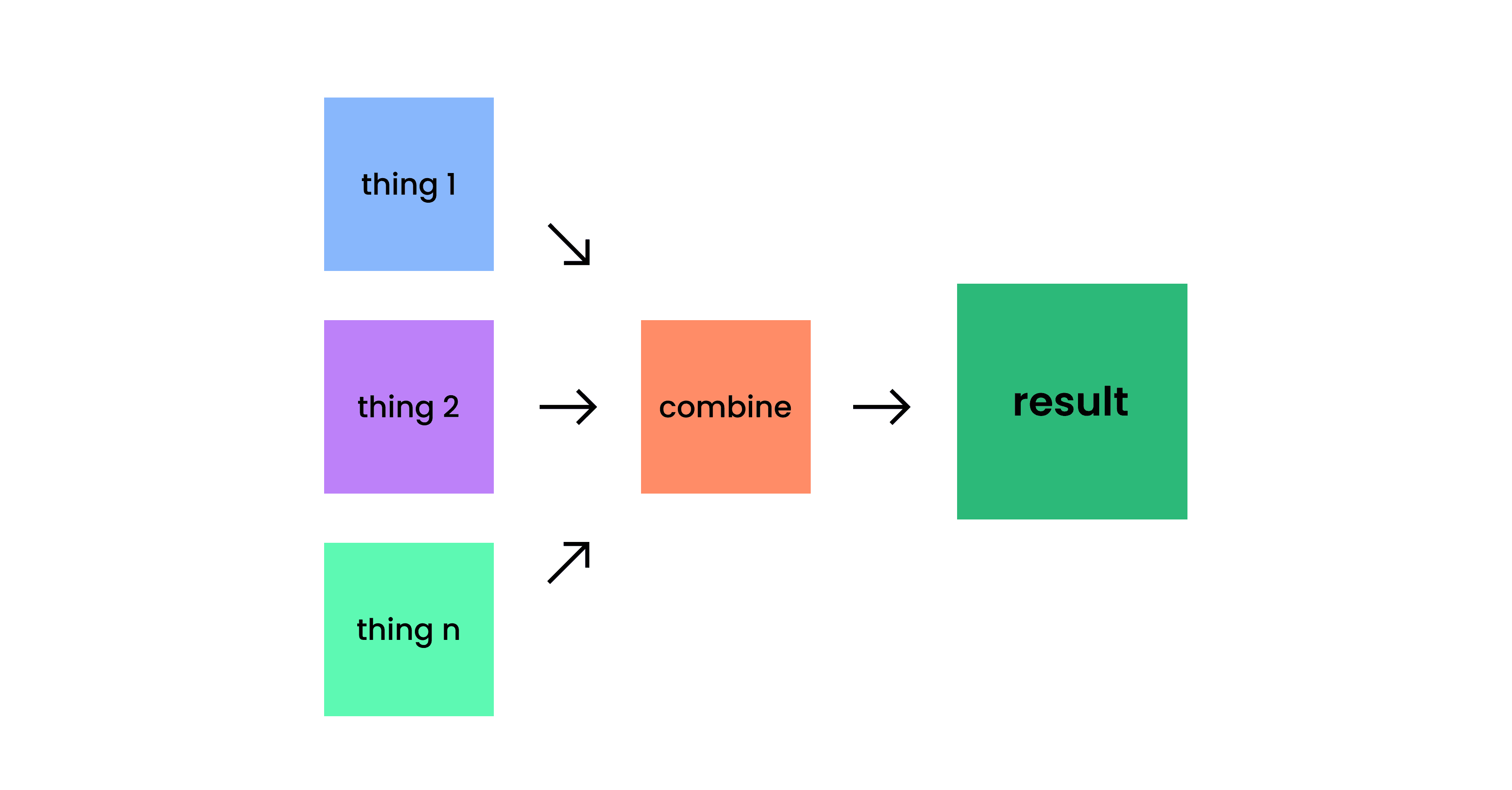

When the steps in your workflow do not build on each other, you can work on them in parallel sessions (each session consisting of its own sequential prompt chain). Once you're satisfied with each, you can combine the outputs into a comprehensive overview.

A project with several very different workstreams is a good use case for this pattern. For instance, you might need to organize an event and consider catering, finances, event photography, attendee invitations, decoration, entertainment, and so on. You would explore the breadth and depth of each facet individually, and only then combine the resulting subtasks into a definitive operational checklist.
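The event example could be sketched roughly as follows. This is a simplified illustration, not a definitive implementation: each facet gets a single prompt instead of a full chain, and `run_parallel`, `combine`, and `stub_llm` are hypothetical names, with `stub_llm` standing in for a real model call.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_parallel(llm: Callable[[str], str], prompts: Dict[str, str]) -> Dict[str, str]:
    """Send independent prompts at the same time and collect results by name."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        futures = {name: pool.submit(llm, prompt) for name, prompt in prompts.items()}
        return {name: future.result() for name, future in futures.items()}


def combine(results: Dict[str, str]) -> str:
    """Merge the separate outputs into one checklist-style overview."""
    return "\n".join(f"- {name}: {text}" for name, text in results.items())


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return f"plan for {prompt}"


facets = {
    "catering": "lunch for 80 attendees",
    "photography": "a one-day conference",
    "invitations": "an email invite sequence",
}
results = run_parallel(stub_llm, facets)
checklist = combine(results)
```

Threads fit here because model calls mostly wait on the network; the facets do not depend on each other, so nothing needs to be ordered until the final merge.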

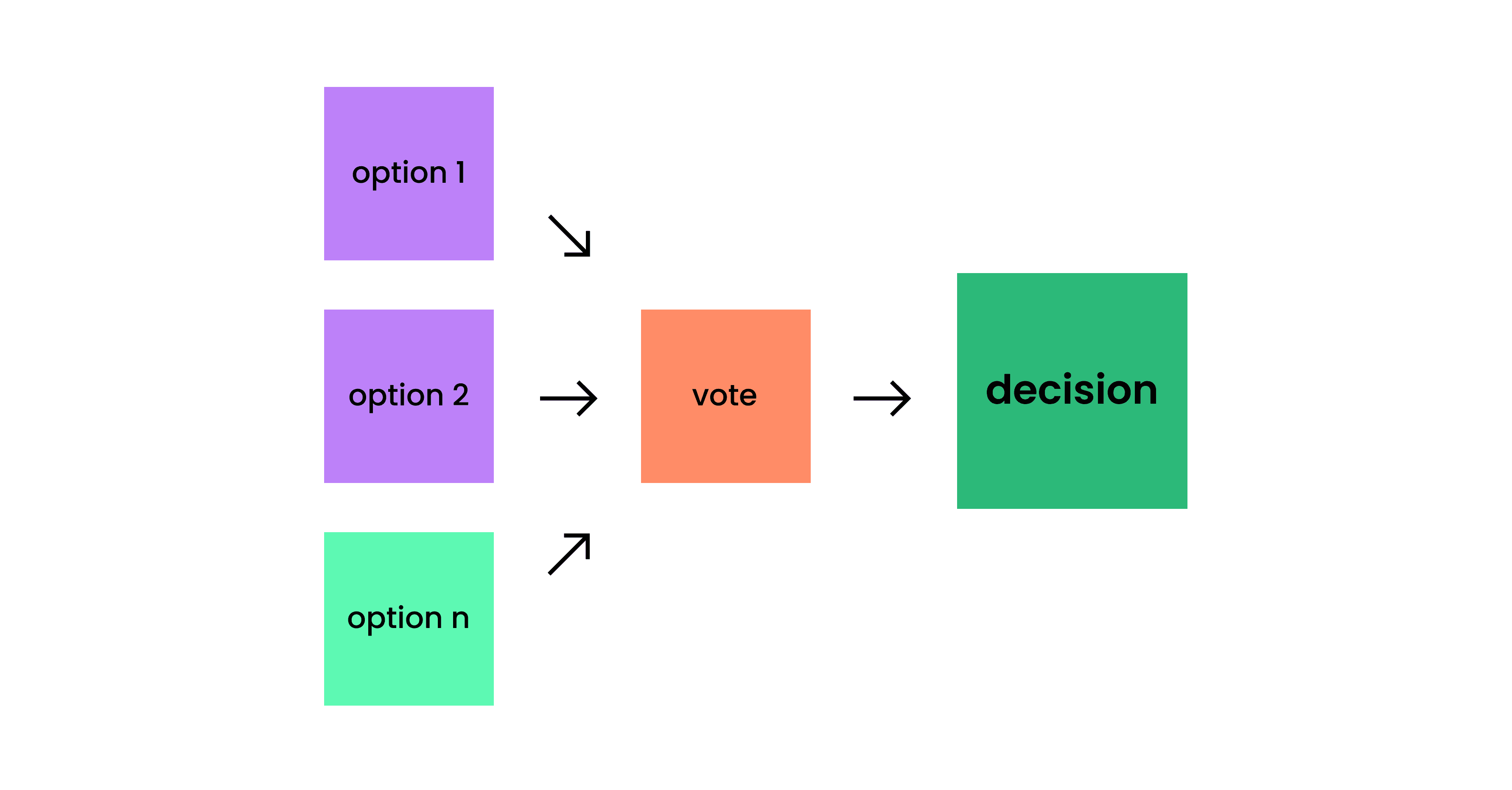

If you're facing a dilemma, or exploring multiple options that are not mutually exclusive, you can use AI to determine which path to prioritize.

You would first present the situation and your options to the language model, and ask for its opinion. Then you'd do this again, and again, changing the wording of your question from time to time to correct for bias. In the end, you take the answer that comes up most often. This way, you're sampling for the option with the highest self-consistency, which gives you an answer with a higher chance of being correct than a single response.
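The sampling idea can be sketched as a simple majority vote. The helper name `majority_answer` and the canned replies are assumptions made for illustration; a real setup would call a model with a non-zero temperature so that repeated answers can actually differ.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, List, Tuple


def majority_answer(
    llm: Callable[[str], str], phrasings: List[str], samples: int = 5
) -> Tuple[str, float]:
    """Ask the same question several times and return the most common answer."""
    votes = Counter()
    # Rotate through the different wordings to reduce phrasing bias.
    for _, prompt in zip(range(samples), cycle(phrasings)):
        votes[llm(prompt).strip().lower()] += 1
    answer, count = votes.most_common(1)[0]
    return answer, count / samples


# Canned replies stand in for a real model so the sketch is repeatable.
replies = iter(["Webinar", "newsletter", "webinar ", "Webinar", "newsletter"])


def stub_llm(prompt: str) -> str:
    return next(replies)


phrasings = [
    "Should we prioritize a webinar or a newsletter this quarter? One word.",
    "Webinar or newsletter: which should come first? One word.",
]
answer, agreement = majority_answer(stub_llm, phrasings)
```

The agreement share is worth keeping alongside the answer: a narrow majority suggests the question is genuinely close and may deserve a human decision.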

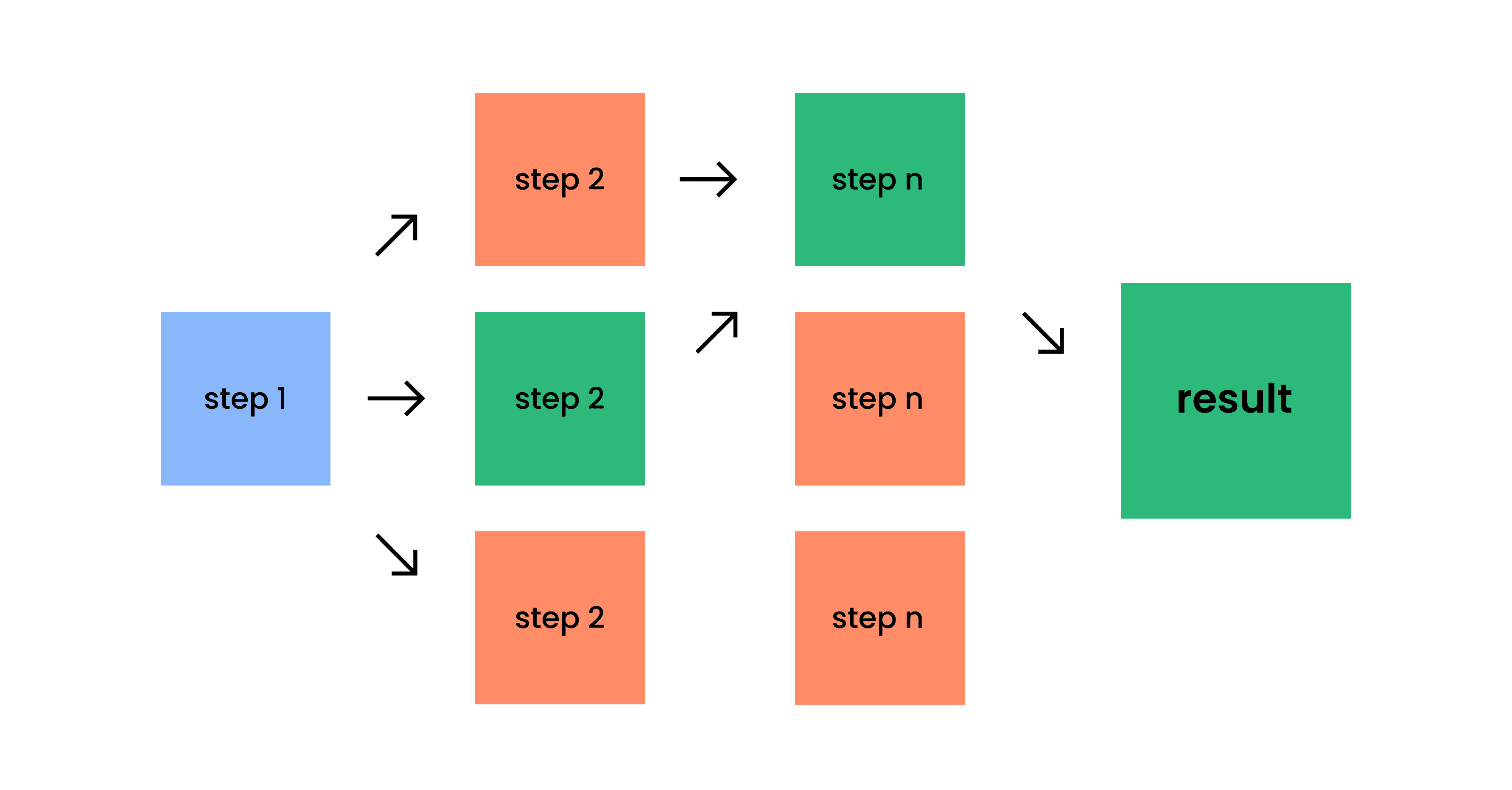

When the problem you're facing is more complex and has several possible solutions at once, you might want to combine the previously mentioned methods. This approach was dubbed Tree of Thoughts in a research paper by Yao et al. It brings together all three patterns: parallel chains, sampling for the best option, and exploring sequences (branches) one step at a time.
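One way to picture the combination is a small breadth-first search that keeps only the most promising branches at each step. The sketch below is a loose illustration under stated assumptions, not the paper's implementation: `tree_search`, `propose`, and `score` are hypothetical names, and the toy `propose` and `score` functions stand in for what would normally be model calls that generate and rate next steps.

```python
from typing import Callable, List


def tree_search(
    propose: Callable[[str], List[str]],
    score: Callable[[str], float],
    root: str,
    depth: int = 2,
    beam: int = 2,
) -> str:
    """Expand branches step by step, keeping only the best few at each level."""
    frontier = [root]
    for _ in range(depth):
        # Parallel branches: every surviving path proposes its next steps.
        candidates = [child for node in frontier for child in propose(node)]
        if not candidates:
            break
        # Sampling for the best option: keep the top-scoring paths only.
        frontier = sorted(candidates, key=score, reverse=True)[:beam]
    return max(frontier, key=score)


def propose(path: str) -> List[str]:
    """Toy stand-in: a real version would ask a model for next steps."""
    return [f"{path} > {option}" for option in ("email", "social", "event")]


def score(path: str) -> float:
    """Toy stand-in: a real version would ask a model to rate the path."""
    return path.count("email") * 2 + path.count("event")


best_path = tree_search(propose, score, "launch")
```

The `beam` parameter controls the trade-off: a wider beam explores more alternatives but multiplies the number of model calls at every level.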

All of the above patterns might require internal loops to double-check the model’s understanding and alignment with user needs and quality standards. This method is also referred to as Reflective prompting, and can include external feedback loops, as well as self-evaluation.
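A feedback loop of this kind might look like the sketch below. The names `refine` and `too_long` are hypothetical, the canned replies replace a real model, and the length check is only one example of a quality standard; the check could equally be a second model call or a human review.

```python
from typing import Callable, List, Tuple


def refine(
    llm: Callable[[str], str],
    task: str,
    check: Callable[[str], List[str]],
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Draft, check against a quality bar, and revise until it passes."""
    draft = llm(task)
    for round_no in range(max_rounds):
        problems = check(draft)
        if not problems:
            return draft, round_no
        # Feed the draft and its problems back to the model for a revision.
        draft = llm(
            f"Task: {task}\nDraft: {draft}\nFix these problems: {'; '.join(problems)}"
        )
    return draft, max_rounds


# Canned replies stand in for a real model so the sketch is repeatable.
replies = iter(["A very long headline that goes on and on", "Short headline"])


def stub_llm(prompt: str) -> str:
    return next(replies)


def too_long(draft: str) -> List[str]:
    return ["over 20 characters"] if len(draft) > 20 else []


final, revisions = refine(stub_llm, "Write a headline for our spring sale", too_long)
```

Capping the loop with `max_rounds` matters in practice, since a model may never satisfy a strict check and each extra round adds cost.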

No matter who made the language model you're working with, it will have model-specific strengths and limits. For further optimization, you can run your preferred prompt chain pattern through different AI models, compare their results, or even have them evaluate and improve each other's outputs.

OpenAI's GPT, Google's Bard, Anthropic's Claude, and any open source model you train or refine will take different approaches and write in distinctly different styles. It is always worth getting at least a second opinion from a model other than the one you usually work with.
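Getting a second opinion can be sketched as follows. No real vendor API is used here: `cross_review` is a hypothetical helper, and `model_a` and `model_b` are placeholder functions where you would plug in calls to whichever models you have access to.

```python
from typing import Callable, Dict, Tuple


def cross_review(
    models: Dict[str, Callable[[str], str]], prompt: str
) -> Tuple[Dict[str, str], Dict[Tuple[str, str], str]]:
    """Collect one draft per model, then let each model critique the others."""
    drafts = {name: ask(prompt) for name, ask in models.items()}
    reviews = {}
    for reviewer, ask in models.items():
        for author, draft in drafts.items():
            if reviewer != author:
                reviews[(reviewer, author)] = ask(
                    f"Critique this answer to '{prompt}': {draft}"
                )
    return drafts, reviews


# Placeholder models; each would wrap a different provider in practice.
models = {
    "model_a": lambda prompt: f"A's take ({len(prompt)} chars of input)",
    "model_b": lambda prompt: f"B's take ({len(prompt)} chars of input)",
}
drafts, reviews = cross_review(models, "Write a tagline for a bike shop")
```

Note that the number of reviews grows quickly with the number of models, so two or three models is usually a sensible starting point.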

Prompt chaining is powerful, but should only be considered when it’s truly necessary.

The longer and more complex the chain, the longer it takes to complete a task from start to finish, and the more model calls you pay for. If an application is cost-sensitive or time-sensitive, it makes sense to keep the chain as short as possible.

Writing effective prompts can minimize the need for Chain-of-Thought reasoning on simpler tasks.

With that in mind, let's look at individual prompts that can be useful in content creation, ideation, and learning, or inside a prompt chain.

This assortment of prompts covers a range of tasks: enhancing written content, fostering creativity, guiding AI responses, role-playing in specific tones or personas, and understanding code. The prompts emphasize clarity, precision, critical thinking, and tailored communication.

The great achievement of language transformers has been revolutionizing human-computer interactions. Plain English, natural human language, is now the new major user interface.

The barrier between conveying your wants and seeing them realized will only crumble further as multimodal prompting, artificial general intelligence, and semantic decoding rise in the coming years.

Just as we have moved since the 1940s from binary machine languages to high-level, human-readable programming languages, and from clicking buttons to the more direct experience of touchscreens, the user experience will keep evolving to feel increasingly natural. Eventually, even today's way of prompting could become redundant, replaced with something closer to interpersonal communication, and perhaps one day mind reading.

But even in the short term, we will soon see unprecedented levels of democratization, accessibility, and personalization of digital content creation. Despite all the drawbacks and risks that technological advancement inherently brings, we can find solace in one thing:

Creativity can roam more freely than ever.
