Have you ever tried to renovate a house while living in it?

That's exactly what many enterprises face with their data warehouses today.

These mission-critical systems need to keep running while somehow transforming to meet modern demands. It's tricky, but you don't actually need to tear everything down to build something better.

Recently, we worked with one of Europe's largest commercial banks as it faced exactly this modernization challenge. Its data warehouse, built in Oracle more than 15 years ago, processed millions of transactions daily, everything from instant transfers to standing orders. It was slow and couldn't keep up with changing business needs. Unfortunately, fully replacing the legacy solution was not an option.

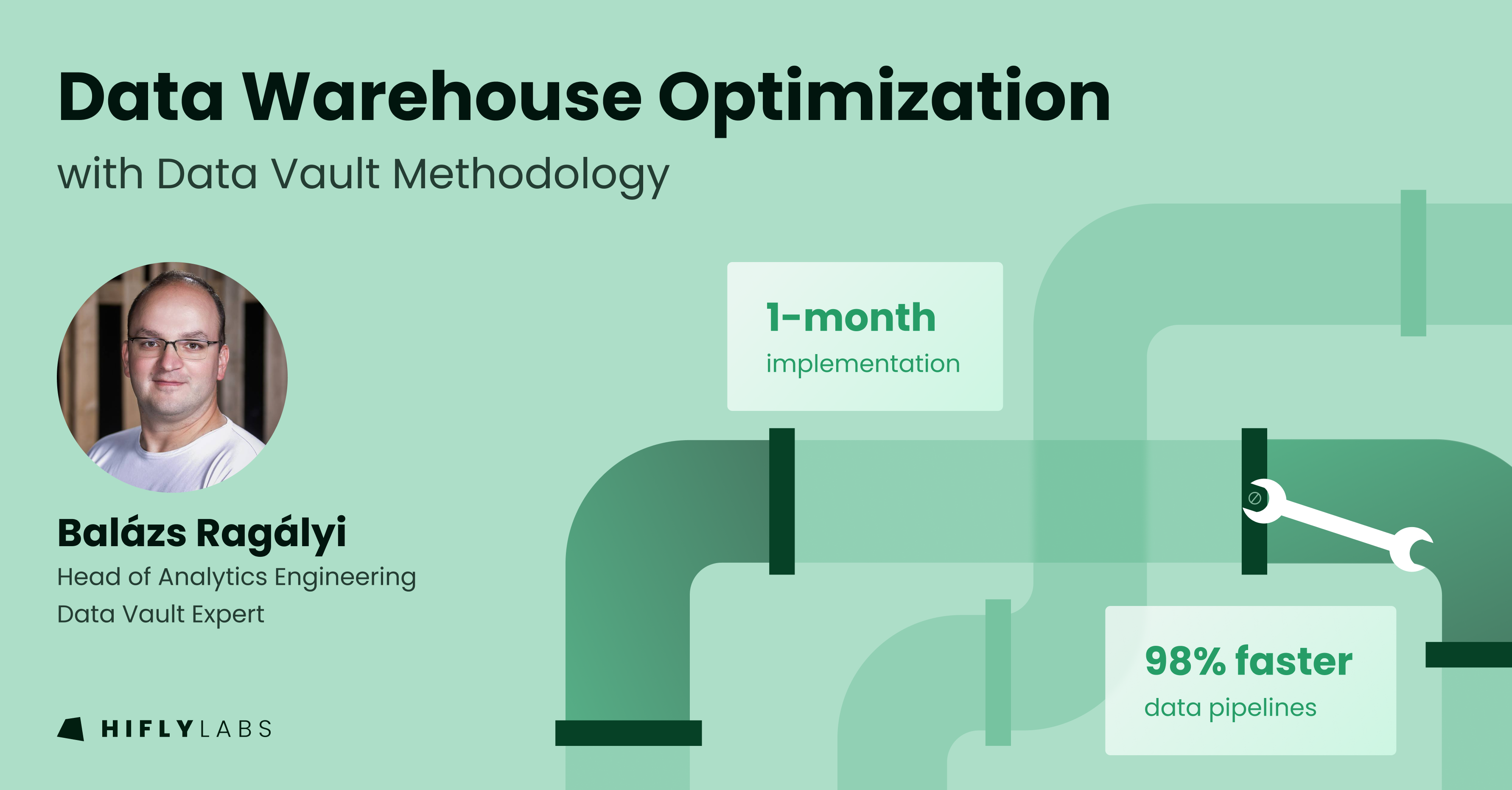

To solve this, we drew on the Data Vault methodology, which has already proven its usefulness. This time, instead of running a full migration, we had to fix only the most pressing pain points, quickly and in a targeted way. We surprised even ourselves: we fixed the most critical data warehouse components in two agile sprints, just one month of development time.

This article is the story of how we did it. And hopefully, you too will learn how to modernize your data warehouse incrementally using best practices from the Data Vault. No big-bang migrations. No system downtime. Just smart, targeted improvements that deliver immediate value while setting you up for future success.

Why are we renovating traditional data warehouses?

Legacy data pipelines, built on decades-old principles, are hitting their limits. Businesses process tons of data from multiple sources daily, but traditional architectures were designed when "real-time" meant "by tomorrow morning."

Four major pain points consistently emerge:

- First, there's the speed issue. In our recent client's case, a seemingly simple payment data consolidation from five sources was taking 4-5 minutes just for basic processing, with the entire chain of 25 downstream steps requiring 15-20 minutes to complete. Every single time.

- Then there's the complexity trap. Your data pipelines probably look like a plate of spaghetti, with multiple source dependencies and resource conflicts. One change here causes three problems there. Sound familiar?

- There's also the problem of historical data. Traditional warehouses often store only transformed data, making it impossible to reprocess historical information when business rules change. Once data is transformed, its original state is lost forever – and with it, the ability to answer new business questions about the past.

- Finally, there's maintenance. Every new business requirement means going deep into existing ETL processes, carefully adjusting them without breaking anything downstream. Like performing surgery on a horse you’re riding.

How can we modernize without migration?

Here's where things get interesting. Instead of replacing your entire data infrastructure or introducing tons of new tools, what if you could strategically modernize the parts that hurt the most?

Picking your battles: a real-world example

When that banking client approached us about their data warehouse challenges, they faced a familiar dilemma. Their payment pipeline pulled data from five different sources, feeding into 25 downstream processes. A complete overhaul would've been risky and expensive. Instead, we focused on their biggest pain point: payment processing.

Four tactical approaches to modernizing a data warehouse

1. Bridge the old with the new

Instead of forcing a complete transformation, create views on top of existing tables that represent data in a Data Vault format. This approach lets you gradually shift to modern patterns while keeping your current operations running smoothly. The bank's existing contract and currency tables? They kept working perfectly while the new structure took shape around them. In Medallion Architecture terms, we rebuilt part of the silver layer and put a virtualized interface layer on top of it, so that changes underneath stay seamless for business users.
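
To make the idea concrete, here is a minimal sketch of Data Vault-style views layered over an untouched legacy table. It uses Python's built-in sqlite3 purely for illustration; the table `legacy_contract`, its columns, and the view names are hypothetical, not the bank's actual schema, and a production Oracle setup would look different in the details.

```python
import sqlite3

# In-memory database standing in for the legacy warehouse (illustrative only).
conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical legacy table; column names are assumptions.
CREATE TABLE legacy_contract (
    contract_no TEXT PRIMARY KEY,
    currency    TEXT,
    status      TEXT,
    updated_at  TEXT
);
INSERT INTO legacy_contract VALUES
    ('C-1001', 'EUR', 'ACTIVE', '2024-01-05'),
    ('C-1002', 'HUF', 'CLOSED', '2024-01-07');

-- Hub view: one row per business key, nothing else.
CREATE VIEW hub_contract AS
SELECT DISTINCT
    contract_no       AS contract_bk,
    'legacy_contract' AS record_source
FROM legacy_contract;

-- Satellite view: descriptive attributes attached to the same business key.
CREATE VIEW sat_contract_details AS
SELECT
    contract_no       AS contract_bk,
    updated_at        AS load_date,
    currency,
    status,
    'legacy_contract' AS record_source
FROM legacy_contract;
""")

# New consumers read the Data Vault-shaped views; the legacy table is unchanged.
hub_rows = conn.execute(
    "SELECT contract_bk FROM hub_contract ORDER BY contract_bk"
).fetchall()
sat_rows = conn.execute(
    "SELECT contract_bk, currency, status "
    "FROM sat_contract_details ORDER BY contract_bk"
).fetchall()
legacy_count = conn.execute("SELECT COUNT(*) FROM legacy_contract").fetchone()[0]

print(hub_rows)
print(sat_rows)
```

Because the views hold no data of their own, existing jobs that read or write `legacy_contract` keep working, while new models can start depending on the hub and satellite shapes right away.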

2. Parallel processing architecture

Why wait in line when you can move in parallel? By adopting Data Vault's hub-link-satellite model, you can process multiple data streams simultaneously. Hubs store only business keys and the hash keys derived from them, while satellites hold the descriptive attributes, with frequently updated ones split from stable ones. Because every hash key can be computed from the source data alone, hubs, links, and satellites no longer have to wait for each other to load. The bank's payment processing time dropped from 4-5 minutes to just 10 seconds. That's a reduction of roughly 96% without replacing their core systems.
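
The sketch below illustrates why hash keys make parallel loading possible. It is an assumption-laden toy, not the bank's pipeline: the staged rows, the loader functions, and the choice of SHA-256 are all hypothetical, and the "tables" are plain dictionaries. The point is that each loader derives its keys from the staged data alone, so none of them needs to look anything up in another table first.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def hash_key(*business_keys: str) -> str:
    """Deterministic hash key built from one or more business keys."""
    normalized = "||".join(k.strip().upper() for k in business_keys)
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


# Hypothetical staged payment rows (field names are assumptions).
staged = [
    {"payment_id": "P-1", "account_no": "A-10", "amount": "120.00", "status": "BOOKED"},
    {"payment_id": "P-2", "account_no": "A-10", "amount": "75.50", "status": "PENDING"},
]


def load_hub_payment(rows):
    return {hash_key(r["payment_id"]): r["payment_id"] for r in rows}


def load_hub_account(rows):
    return {hash_key(r["account_no"]): r["account_no"] for r in rows}


def load_link_payment_account(rows):
    # The link stores the parent hash keys, computed here without reading the hubs.
    return {
        hash_key(r["payment_id"], r["account_no"]): (
            hash_key(r["payment_id"]),
            hash_key(r["account_no"]),
        )
        for r in rows
    }


def load_sat_payment(rows):
    return {
        hash_key(r["payment_id"]): {"amount": r["amount"], "status": r["status"]}
        for r in rows
    }


loaders = [load_hub_payment, load_hub_account, load_link_payment_account, load_sat_payment]

# All four loads run side by side; map() returns results in loader order.
with ThreadPoolExecutor(max_workers=len(loaders)) as pool:
    hub_payment, hub_account, link_payment_account, sat_payment = pool.map(
        lambda load: load(staged), loaders
    )

print(len(hub_payment), len(hub_account), len(link_payment_account), len(sat_payment))
```

With sequence-generated surrogate keys, the link and satellite loads would have to wait for the hubs to assign IDs. Deterministic hash keys remove that ordering constraint, which is where the parallelism comes from.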

3. Preserve raw data for future analytics

You can store raw data in its original form using satellite tables before any transformations take place. This creates a time machine for your data, allowing you to reprocess historical information whenever business rules change. For the bank, this meant they could finally analyze historical payment patterns using new business criteria, something that was impossible before. It also means you can switch from ETL to ELT well before your organization is ready to commit to a full-blown lakehouse.
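
As a rough illustration of that "time machine", the sketch below keeps untransformed payment records in a raw satellite and applies two versions of a business rule to the same history. Everything here is hypothetical: the `RawPaymentSat` record, the sample rows, and both "high value" rules are invented for the example.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class RawPaymentSat:
    """One untransformed satellite row, stored exactly as it arrived."""
    payment_id: str
    load_date: str
    amount: Decimal
    currency: str
    channel: str


# Hypothetical raw history; nothing is filtered or derived at load time.
RAW_SAT = [
    RawPaymentSat("P-1", "2023-03-01", Decimal("900.00"), "EUR", "mobile"),
    RawPaymentSat("P-2", "2023-03-02", Decimal("15000.00"), "EUR", "branch"),
    RawPaymentSat("P-3", "2024-06-10", Decimal("6000.00"), "EUR", "mobile"),
]


def high_value_v1(row: RawPaymentSat) -> bool:
    # Original rule (assumed): any payment of 10,000 or more.
    return row.amount >= Decimal("10000")


def high_value_v2(row: RawPaymentSat) -> bool:
    # Revised rule (assumed): mobile payments of 5,000 or more.
    return row.amount >= Decimal("5000") and row.channel == "mobile"


def apply_rule(rule, rows):
    """Transform on read: the rule runs over raw rows, never over derived ones."""
    return [r.payment_id for r in rows if rule(r)]


flagged_v1 = apply_rule(high_value_v1, RAW_SAT)  # ['P-2']
flagged_v2 = apply_rule(high_value_v2, RAW_SAT)  # ['P-3']
print(flagged_v1, flagged_v2)
```

Had only the output of the first rule been stored, the `channel` detail needed by the second rule would be gone. Keeping the raw rows and transforming afterwards is the ELT pattern in miniature.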

4. Automation is the long-term solution

Here's a secret: much of the modernization work can be automated. We developed tools that generate Data Vault components based on the client's current environment, dramatically reducing the effort needed for future development. Having an architect's template that you can reuse whenever needed pays off big time in the long run. We advise remodeling the warehouse using standardized automation that works across the entire architecture, from data sources to analytics!
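
To give a feel for what such automation can look like, here is a small sketch that generates hub and satellite DDL from a metadata description. It is not the tooling we built for the client; `EntitySpec`, the naming conventions, and the generic SQL column types are assumptions chosen for the example, and a real generator would target the dialect of your own warehouse.

```python
from dataclasses import dataclass, field


@dataclass
class EntitySpec:
    """Hypothetical metadata describing one business entity."""
    name: str
    business_key: str
    attributes: dict[str, str] = field(default_factory=dict)  # column -> SQL type


def hub_ddl(spec: EntitySpec) -> str:
    """Render the hub table: hash key, business key, and audit columns."""
    return (
        f"CREATE TABLE hub_{spec.name} (\n"
        f"    {spec.name}_hk CHAR(64) PRIMARY KEY,\n"
        f"    {spec.business_key} VARCHAR(100) NOT NULL,\n"
        f"    load_date TIMESTAMP NOT NULL,\n"
        f"    record_source VARCHAR(50) NOT NULL\n"
        f");"
    )


def sat_ddl(spec: EntitySpec) -> str:
    """Render the satellite table: parent hash key plus descriptive attributes."""
    attr_lines = "".join(
        f"    {col} {col_type},\n" for col, col_type in spec.attributes.items()
    )
    return (
        f"CREATE TABLE sat_{spec.name} (\n"
        f"    {spec.name}_hk CHAR(64) NOT NULL,\n"
        f"    load_date TIMESTAMP NOT NULL,\n"
        f"{attr_lines}"
        f"    record_source VARCHAR(50) NOT NULL,\n"
        f"    PRIMARY KEY ({spec.name}_hk, load_date)\n"
        f");"
    )


# One template, reused for every entity described in metadata.
payment = EntitySpec(
    name="payment",
    business_key="payment_id",
    attributes={"amount": "DECIMAL(18,2)", "status": "VARCHAR(20)"},
)

print(hub_ddl(payment))
print(sat_ddl(payment))
```

Adding a new entity then means adding one more `EntitySpec`, rather than hand-writing and reviewing another set of tables.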

Tangible results from incremental changes

The numbers speak for themselves.

The targeted process chain duration was cut in half, from 15-20 minutes to 8-10 minutes.

Payment processing time dropped from 4-5 minutes to just 10 seconds.

We achieved this in just one month, in two agile sprints, without any system downtime.

But the real win goes beyond metrics. Their data warehouse can now adapt to new requirements much more quickly. Business rules can change without requiring massive redevelopment efforts. And perhaps most importantly, they've created a foundation for continuous improvement.

This approach is like modernizing one room at a time, each improvement making the whole house better while keeping it perfectly livable throughout the process.

If you’re locked inside a legacy system, don’t despair. Start small. Pick your least favorite process. Apply Data Vault principles. Measure the results. Then decide where to go next.

Your data warehouse isn't just a system—it's a journey. And sometimes, the smartest path forward is one side-step at a time.

Balázs Ragályi, author of this article, has previously spoken at the Budapest dbt Meetup about using Data Vault with dbt. You can watch the recording of the presentation here.