
In Part I, we argued in favor of using Superset as a free and open-source solution. Make sure to check it out first to understand our dedication to and excitement about the project. Now, let’s dig into how we test-drove Superset on top of other fascinating technologies.

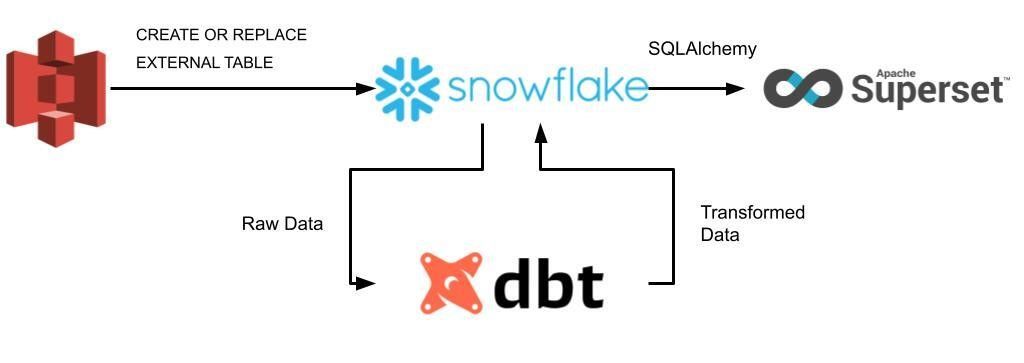

We set out to showcase how Superset can consume data from a centralized data store such as Snowflake, and to build a stack of promising technologies in the process.

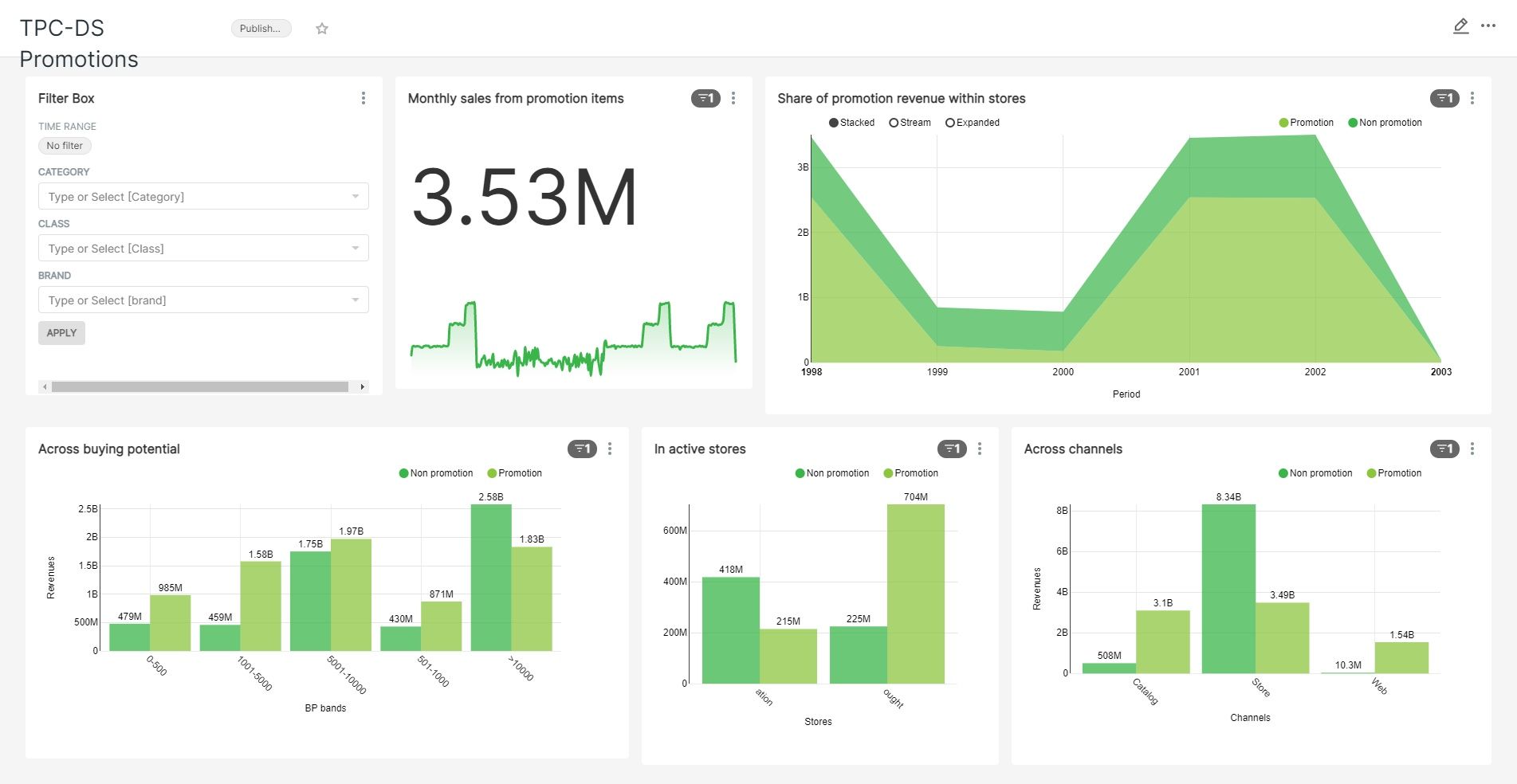

The promotions data mart followed a star schema, with a fact table of approximately 5 million rows and 47 columns. We also set up a wide table, in the modern analytical style, by flattening the star schema into one joined table to test the performance of Superset.
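To make the flattening step concrete, here is a minimal sketch of how a star schema can be joined into one wide table. It uses an in-memory SQLite database rather than Snowflake, and every table and column name (`fact_promotion`, `dim_product`, `dim_store`, `wide_promotion`) is a hypothetical stand-in, not the schema of our actual data mart.

```python
import sqlite3


def create_star_schema(conn: sqlite3.Connection) -> None:
    """Create a tiny, hypothetical star schema: one fact table, two dimensions."""
    conn.executescript(
        """
        CREATE TABLE dim_product (
            product_id INTEGER PRIMARY KEY, product_name TEXT, category TEXT);
        CREATE TABLE dim_store (
            store_id INTEGER PRIMARY KEY, store_name TEXT, region TEXT);
        CREATE TABLE fact_promotion (
            promo_id INTEGER PRIMARY KEY, product_id INTEGER, store_id INTEGER,
            discount_pct REAL, units_sold INTEGER);

        INSERT INTO dim_product VALUES
            (10, 'Espresso Beans', 'Coffee'), (11, 'Green Tea', 'Tea');
        INSERT INTO dim_store VALUES
            (100, 'Downtown', 'North'), (101, 'Harbor', 'South');
        INSERT INTO fact_promotion VALUES
            (1, 10, 100, 15.0, 120), (2, 11, 100, 20.0, 80), (3, 10, 101, 10.0, 45);
        """
    )


def flatten_to_wide_table(conn: sqlite3.Connection) -> None:
    """Join the fact table to its dimensions and persist the result as one table."""
    conn.executescript(
        """
        CREATE TABLE wide_promotion AS
        SELECT f.promo_id, f.discount_pct, f.units_sold,
               p.product_name, p.category,
               s.store_name, s.region
        FROM fact_promotion AS f
        LEFT JOIN dim_product AS p ON p.product_id = f.product_id
        LEFT JOIN dim_store AS s ON s.store_id = f.store_id;
        """
    )


conn = sqlite3.connect(":memory:")
create_star_schema(conn)
flatten_to_wide_table(conn)
wide_rows = conn.execute(
    "SELECT * FROM wide_promotion ORDER BY promo_id"
).fetchall()
for row in wide_rows:
    print(row)
```

The wide table keeps one row per fact row and repeats the dimension attributes on each of them, so a chart can query it without any joins at read time. The price is redundant storage.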

dbt can be considered a perfect companion to Snowflake for data transformation:

Our assessment is manifested in various findings.

Let us now evaluate the layers of Superset!

SQL Lab:

Data Exploration:

Dashboarding:

Firstly, according to the official documentation, Superset isn't officially supported on Windows. Windows users can therefore only try out Superset locally via an Ubuntu Desktop VM or WSL(2). The former likely works, but it wasn't efficient from our standpoint. We iterated through the latter option but, unfortunately, ran into issues that were either unknown or known but unresolved. We eventually managed to start Superset locally by launching the DockerHub image step by step (instead of using docker-compose), but we suggest you avoid installing it on Windows if you can. That being said, we hope that it will be natively supported sooner or later. As a footnote, it’s also important to highlight that we self-hosted the 0.999.0dev version of Superset throughout our testing.

Secondly, it’s advisable to index (or cluster) the source tables (or materialized views) of visualizations, to optimize the underlying virtual warehouse in Snowflake both vertically and horizontally, and to take advantage of micro-partition pruning (or dimensionality reduction). Otherwise, slice and dashboard queries tend to time out due to concurrency, especially if filters are applied to multiple slices on the dashboard. You can verify this by inspecting the execution plan of each chart in Snowflake’s query profile section and checking whether table scanning consumes the lion's share of the resources. Since queries can be saved and traced back within Superset, we can always reuse previous queries. Note that benchmarking Superset's query performance on Snowflake wasn't our top priority, although we gained a general sense of it during our work.
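To illustrate why clustering matters for pruning, here is a toy model of the idea. It is a sketch of the concept only, not Snowflake's actual implementation: the `MicroPartition` class, the partition names, and the row counts are all made up for illustration. Each partition records the minimum and maximum date it contains, and a date filter can skip any partition whose range does not overlap the filter.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class MicroPartition:
    """Hypothetical partition metadata: value range of one column plus a row count."""
    name: str
    min_date: str  # ISO dates compare correctly as plain strings
    max_date: str
    row_count: int


def prune(partitions: List[MicroPartition], start: str, end: str) -> List[MicroPartition]:
    """Keep only partitions whose [min_date, max_date] overlaps [start, end]."""
    return [p for p in partitions if p.max_date >= start and p.min_date <= end]


def scanned_fraction(partitions: List[MicroPartition], start: str, end: str) -> float:
    """Share of all rows that still has to be scanned after pruning."""
    total = sum(p.row_count for p in partitions)
    kept = sum(p.row_count for p in prune(partitions, start, end))
    return kept / total if total else 0.0


# Clustered by date: each partition covers a narrow, non-overlapping range.
clustered = [
    MicroPartition("p1", "2021-01-01", "2021-01-31", 1000),
    MicroPartition("p2", "2021-02-01", "2021-02-28", 1000),
    MicroPartition("p3", "2021-03-01", "2021-03-31", 1000),
    MicroPartition("p4", "2021-04-01", "2021-04-30", 1000),
]

# Not clustered: every partition spans the whole date range, so nothing can be skipped.
unclustered = [
    MicroPartition(f"u{i}", "2021-01-01", "2021-04-30", 1000) for i in range(1, 5)
]

clustered_share = scanned_fraction(clustered, "2021-02-10", "2021-02-20")
unclustered_share = scanned_fraction(unclustered, "2021-02-10", "2021-02-20")
print(clustered_share, unclustered_share)
```

In this toy setup the same dashboard filter touches a quarter of the rows on the clustered layout and all of them on the unclustered one. That is the kind of difference that shows up as a dominant table scan in the query profile.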

Thirdly, Superset stores the dashboard components (metadata and slice configurations) in its dedicated database. We therefore decided to store and transfer dashboards between instances manually, rather than mounting the database onto a host folder and gluing together each piece of a dashboard. To refer back to what was said in Part I, the Superset community is very close to a pull workflow solution where you can work with YAML files of dashboards through the API. To our understanding, Superset supports exporting individual dashboards with a CLI command, but as of now we also feel the urge to develop a bulk export option.

Let us now quickly summarize what we have learned together!

Commercial BI tools had ruled the market for years before cost-friendly open-source candidates gradually started to show up. Among those, Superset is considered one of the most exciting projects, and it’s certainly worth keeping an eye on.

We exposed Superset to a stack of promising technologies, notably dbt, which is also an important technology Hiflylabs is moving forward with. From our experience, Superset's learning curve is gentler than that of its feature-loaded counterparts, and we mustn't overlook the beauty and simplicity of its visualizations. We are committed to both the dbt and Superset open-source projects, and we look forward to extending our client base by offering services on both in the foreseeable future.

As there have been instances of Superset slowly overtaking costly BI tools, we also hope to contribute to the initiative to cut costs and to leverage our expertise in supercharging Superset. Even though Superset has downsides owing to its recent graduation, we believe that the strong community and the committers behind the project can lift the product to heights that match its potential.

Now that you have seen Apache Superset working together with products such as Snowflake and dbt, what are your impressions of it being the “chosen one” among the free and open-source solutions? Do you see any possibility of Superset establishing a noticeable share in the overheated data visualization market? Let us know in the comment section below!

Author:

Son N. Nguyen - Data Engineer

