Overview of AI agents and multi-agent systems, covering architectures, components, and best practices for building effective, transparent, and secure automated workflows.

Welcome to AI Agents! This post provides an overview of agentic behavior and multi-agent structures. We'll also cover approaches to creating practical, transparent, and secure automated workflows.

Update (May 2025): From High-Level Concepts to Practical Application

(Editor's Note: This section was added retroactively to guide you to our latest, more in-depth resources on AI agent development.)

When we first published this high-level overview, the world of AI agents was evolving rapidly. Since then, we’ve amassed a year’s worth of practical experience in building and deploying them. If you're ready to move from concept to execution, we have two fresh resources for you:

- For a look at the specific tools and standards powering modern agentic systems, our follow-up article on Frameworks, Protocols, and Practical Tips for Building AI Agents is the perfect next step.

- See how these frameworks are applied to solve real-world software development challenges in our new whitepaper, "Integrating Reliable AI Agents into the Digital Product Development Lifecycle." Download your copy here to see our coding agent blueprints in action.

At its core, agency means taking initiative—making decisions and actions that have real-world effects. But what does this mean in the context of AI?

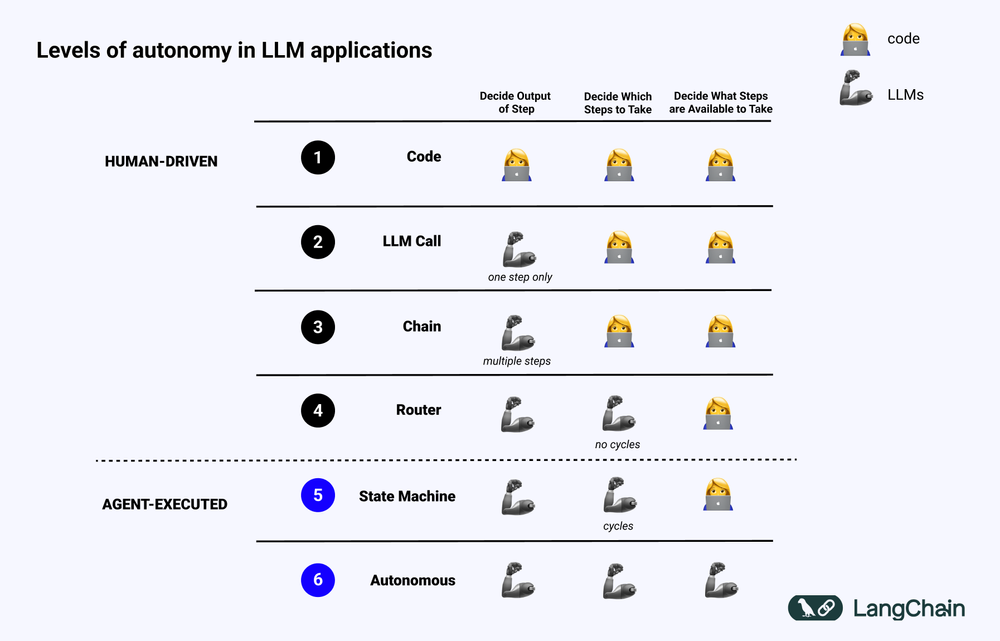

AI agents, and agentic behaviors or workflows, are systems that use AI models to exert some level of control over an application's processes.

Interestingly, when we discuss agents with our clients or other data professionals, we often encounter a wide range of ideas about what the definition should entail. Some are content with existing multimodal LLM capabilities, while others won't settle for anything less than superintelligence.

But here's the thing: from a business standpoint, the technical definition is largely irrelevant. Even the simplest agentic workflow, consisting of nothing more than API calls, can automate and optimize tasks.
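To make this concrete, here is a minimal sketch of such a workflow in Python, in which a model's decision selects which API to call. The functions `get_weather`, `get_stock_price` and `choose_action` are hypothetical; `choose_action` uses keyword matching as a stand-in for a real LLM call, so the example runs without any model or network access.

```python
from typing import Callable

# Hypothetical "APIs"; in practice these would be real HTTP or SDK calls.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"          # dummy response

def get_stock_price(ticker: str) -> str:
    return f"{ticker}: 123.45 USD"     # dummy response

ACTIONS: dict[str, Callable[[str], str]] = {
    "weather": get_weather,
    "stock": get_stock_price,
}

def choose_action(query: str) -> tuple[str, str]:
    """Stand-in for an LLM call that returns (action_name, argument).

    A real workflow would prompt a model and parse its structured reply;
    keyword matching keeps this sketch self-contained and deterministic.
    """
    last_word = query.split()[-1]
    if "weather" in query.lower():
        return "weather", last_word
    return "stock", last_word.upper()

def run_workflow(query: str) -> str:
    # The model's decision picks which process runs next: this is the
    # "some level of control" that makes even a tiny workflow agentic.
    action, arg = choose_action(query)
    return ACTIONS[action](arg)

print(run_workflow("What is the weather in Budapest"))  # Sunny in Budapest
print(run_workflow("Latest price for aapl"))            # AAPL: 123.45 USD
```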

That said, our current approach aims a little higher. Let's explore what that looks like.

A multi-agent architecture consists of an ensemble of AI models. These compound systems let you automate complex tasks more efficiently and accurately than a single agentic workflow could.

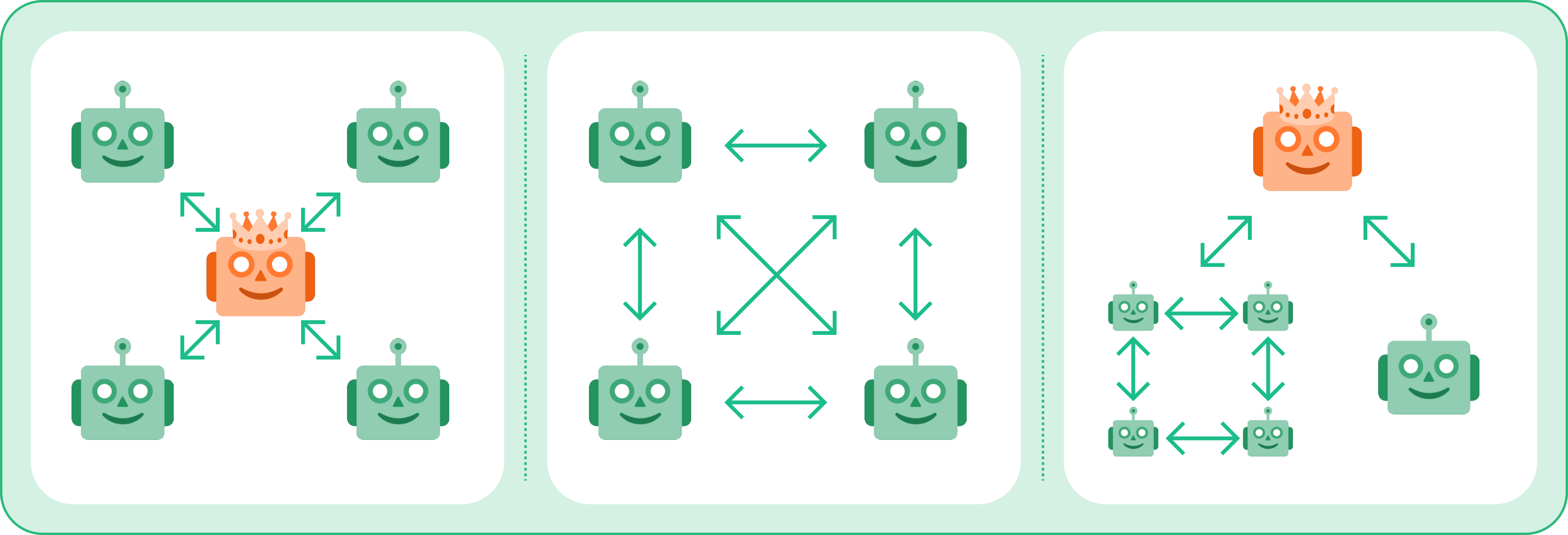

Let’s examine some dimensions along which we can categorize multi-agent systems:

- Communication

- Hierarchy

- Connectivity

Here we have three different multi-agent setups, from left to right:

The above illustration combines concepts from two sources:

These categories are not mutually exclusive; you can mix and match them to create increasingly complex systems. Nor is the list exhaustive: there are countless ways to abstract these systems, and you should determine the most useful approach for your project on a case-by-case basis.

Just be sure to tailor your design to your needs; otherwise, overcomplication may hinder practical functionality (more on this later).
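As a rough illustration of the hierarchy and connectivity dimensions, the following Python sketch shows a two-level hierarchy in which a single orchestrator routes tasks to specialist workers. The `Agent` and `Orchestrator` classes, the keyword-based routing and the worker names are all hypothetical simplifications; in a real system, routing would typically be decided by a model, and each worker would wrap its own model or tools.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    """A specialist worker with one skill and one well-defined entry point."""
    name: str
    skill: str                       # keyword the orchestrator routes on (hypothetical scheme)
    handle: Callable[[str], str]

class Orchestrator:
    """Top level of a two-level hierarchy: it delegates, workers execute.

    Workers never talk to each other here; all communication flows through
    the orchestrator, i.e. a centralized, star-shaped connectivity pattern.
    """

    def __init__(self, workers: list[Agent]) -> None:
        self.workers = {w.skill: w for w in workers}

    def route(self, task: str) -> str:
        for skill, worker in self.workers.items():
            if skill in task.lower():
                return f"[{worker.name}] {worker.handle(task)}"
        return "[Orchestrator] no suitable worker, escalating to a human"

team = Orchestrator([
    Agent("SQLAgent", "sql", lambda task: "generated SQL query"),
    Agent("ChartAgent", "chart", lambda task: "rendered chart"),
])
print(team.route("Write SQL for monthly revenue"))  # [SQLAgent] generated SQL query
print(team.route("Make a chart of revenue"))        # [ChartAgent] rendered chart
```

Swapping the star topology for direct worker-to-worker messages, or adding a middle management layer, changes the communication and connectivity dimensions without touching the workers themselves.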

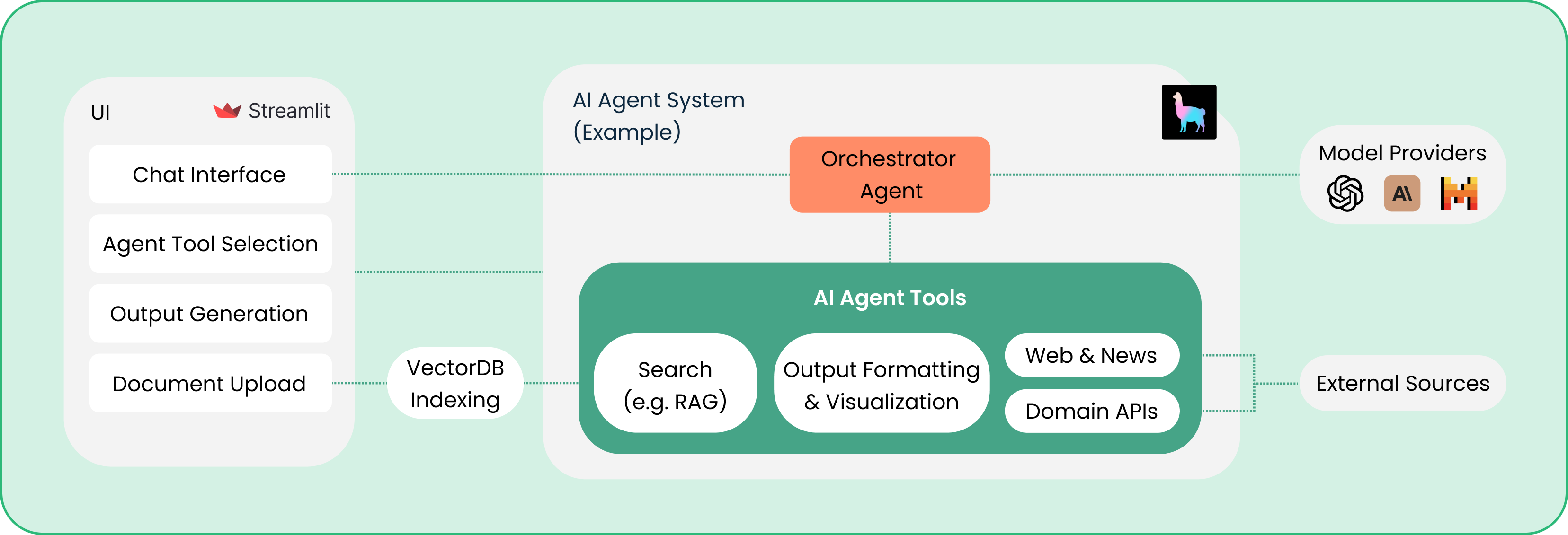

Let’s review typical components of multi-agent systems:

Your main goal should be to build modular components that represent specific skills or tasks, and create a system in which they interact. This way, you can:

But what goes into cooking up a single agent? Let's break it down!

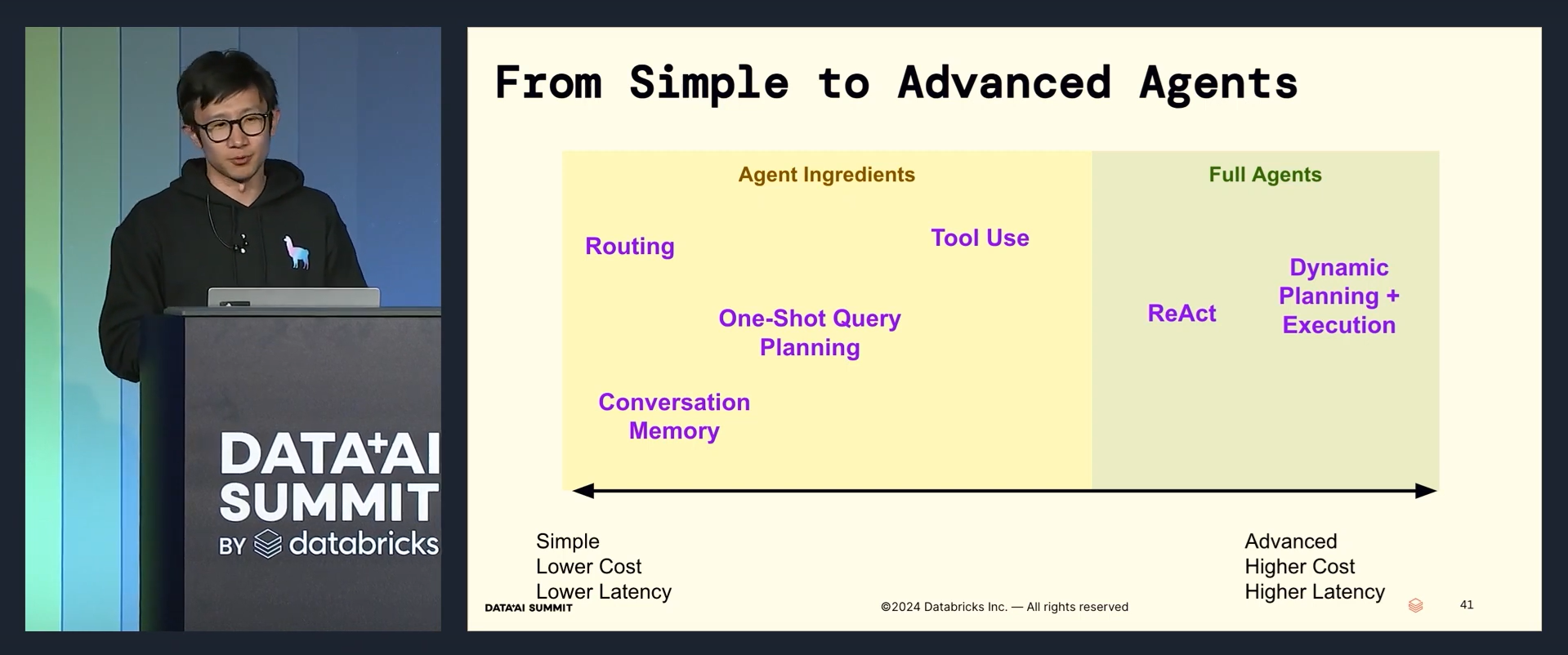

Simple, lower-cost, lower-latency agent ingredients:

Advanced, higher-cost, higher-latency full agents:

A compound multi-agent system can combine several of these techniques, so that basic building blocks boost each other’s capabilities cumulatively, or so that less capable models can get coaching from more capable ones.
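One way to read the coaching idea is as a draft-and-critique loop: a small, cheap model produces every draft, while a larger model only reviews and returns feedback. The Python sketch below assumes that pattern; `coached_answer`, `small_model` and `large_model` are hypothetical names, and the two models are scripted stand-ins rather than real API calls.

```python
from typing import Callable, Optional

# (task, feedback) -> draft; would be a small, cheap model in practice.
Drafter = Callable[[str, Optional[str]], str]
# (task, draft) -> feedback, or None if the draft is acceptable; a larger model.
Critic = Callable[[str, str], Optional[str]]

def coached_answer(task: str, small: Drafter, large: Critic,
                   max_rounds: int = 3) -> tuple[str, int]:
    """The small model writes every draft; the large model only reviews.

    Returns the final draft and how many review rounds were used.
    """
    feedback: Optional[str] = None
    draft = ""
    for round_no in range(1, max_rounds + 1):
        draft = small(task, feedback)
        feedback = large(task, draft)
        if feedback is None:          # critic is satisfied
            return draft, round_no
    return draft, max_rounds          # best effort once the budget is spent

# Scripted stand-ins for the two models (hypothetical behavior).
def small_model(task: str, feedback: Optional[str]) -> str:
    return "42" if feedback else "forty-two"

def large_model(task: str, draft: str) -> Optional[str]:
    return None if draft.isdigit() else "Answer with digits only."

print(coached_answer("What is 6 * 7?", small_model, large_model))  # ('42', 2)
```

The appeal of this split is cost: the expensive model reads short drafts and writes short feedback, while the cheap model does the bulk of the generation.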

More often than not, agentic systems involve a flow of information, with data sources to scour or files to manipulate.

In every project so far, end-to-end search pipelines have been on the feature list, either within or adjacent to the agentic solution itself. Web and search features (e.g., RAG, Text-to-SQL) are technically not a must for agents, but since they are highly requested quality-of-life additions, we felt we should dedicate a section to their transparency.

We have found that for reliability, clarity, and user trust, it's vital to see which sources the agents have used and how they arrived at their results.

Our systems provide this transparency in several ways within the user interface:

While not as deep a concept as neural mapping interpretability, this kind of open workflow traceability is very valuable from a UX perspective.
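The exact UI elements are project-specific, but the data behind them can be quite simple. The Python sketch below assumes a hypothetical `Trace` object that records every step and every source an agent touches, plus a toy keyword-overlap `search` over an in-memory `DOCS` dictionary standing in for a real RAG or Text-to-SQL pipeline. The interface can then render `trace.steps` and `trace.sources` next to the answer.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    tool: str
    detail: str

@dataclass
class Trace:
    """Everything the UI needs to show how an answer was produced."""
    steps: list[Step] = field(default_factory=list)
    sources: list[str] = field(default_factory=list)

    def log(self, tool: str, detail: str) -> None:
        self.steps.append(Step(tool, detail))

# Hypothetical in-memory corpus standing in for a real search index.
DOCS = {
    "policy.pdf#p3": "Refunds are processed within 14 days.",
    "faq.html#shipping": "Shipping takes 3-5 business days.",
}

def search(query: str, trace: Trace, top_k: int = 1) -> list[str]:
    # Naive keyword-overlap scoring; a real pipeline would use RAG or Text-to-SQL.
    words = set(query.lower().split())
    scored = sorted(
        DOCS.items(),
        key=lambda kv: len(words & set(kv[1].lower().split())),
        reverse=True,
    )
    hits = [doc_id for doc_id, _ in scored[:top_k]]
    trace.log("search", f"query={query!r} -> {hits}")
    trace.sources.extend(hits)      # every source used is recorded for the UI
    return hits

trace = Trace()
hits = search("how long do refunds take", trace)
answer = DOCS[hits[0]]
trace.log("answer", "quoted the top hit verbatim")
print(answer, "| sources:", trace.sources)
```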

Here's a golden rule for successful agents:

Use as little GenAI and as many deterministic functions as possible.

Why?

These compound systems have an inherent weakness: many more points of failure than simpler setups.

When a simple method will do, use it! Deterministic algorithms are almost always preferable to non-deterministic generative AI. Some examples:

That's why our architecture relies on pre-defined, fool-proof tools such as Python functions, code executors, and chart generators. These are essential for passing information between layers, and their well-defined actions allow the Orchestrator to route efficiently.
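To illustrate what well-defined actions can look like, here is a minimal Python sketch in which the model's only job is to emit a JSON action, and ordinary deterministic functions do the actual work. The tool names, the JSON shape (`{"tool": ..., "args": {...}}`) and the `execute` dispatcher are assumptions made for this example, not a specific framework's API.

```python
import json
from typing import Any, Callable

# Deterministic tools: same input, same output, no model involved.
def add_vat(amount: float, rate: float = 0.2) -> float:
    # 20% is an illustrative default rate, not a recommendation.
    return round(amount * (1 + rate), 2)

def to_csv_row(values: list) -> str:
    return ",".join(str(v) for v in values)

TOOLS: dict[str, Callable[..., Any]] = {"add_vat": add_vat, "to_csv_row": to_csv_row}

def execute(action_json: str) -> Any:
    """Run one well-defined action emitted by the orchestrator.

    The LLM's only job is to produce JSON such as
    {"tool": "add_vat", "args": {"amount": 100}}; everything after parsing
    is ordinary, testable code. Unknown tools fail loudly instead of being
    improvised by the model.
    """
    action = json.loads(action_json)
    name = action.get("tool")
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    return TOOLS[name](**action.get("args", {}))

print(execute('{"tool": "add_vat", "args": {"amount": 100}}'))  # 120.0
```

Because the tools are plain functions, they can be unit-tested in isolation, and the only non-deterministic step left is the model choosing which one to call.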

Building agent-like systems is hard not so much because of their complexity as because of current LLM limitations, security risks, and reliability concerns.

Fixing these is a major focus in all of our projects. You can read more about the most common LLM safety risks and ways to mitigate them in our previous blog post.
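A common mitigation for unreliable model output is to validate every reply and retry with the error fed back to the model. The Python sketch below assumes the model is asked to return a JSON object; `call_with_validation` and the scripted `flaky_llm` are hypothetical, and a production version would typically validate against a full schema rather than a set of required keys.

```python
import json
from typing import Callable

def call_with_validation(llm: Callable[[str], str], prompt: str,
                         required_keys: set[str], max_attempts: int = 3) -> dict:
    """Retry an LLM call until its reply is a JSON object with the expected keys.

    `llm` is any prompt -> text function. Each failed attempt feeds the
    validation error back into the next prompt so the model can self-correct.
    """
    last_error = ""
    for _ in range(max_attempts):
        full_prompt = f"{prompt}\nPrevious error: {last_error}" if last_error else prompt
        raw = llm(full_prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"invalid JSON ({exc.msg})"
            continue
        if not isinstance(data, dict):
            last_error = "expected a JSON object"
            continue
        missing = required_keys - set(data)
        if missing:
            last_error = f"missing keys: {sorted(missing)}"
            continue
        return data
    raise RuntimeError(f"gave up after {max_attempts} attempts: {last_error}")

# Scripted stand-in for a flaky model (hypothetical replies).
replies = iter([
    "Sure! Here is the JSON you asked for:",
    '{"intent": "refund"}',
    '{"intent": "refund", "order_id": 17}',
])
prompts: list[str] = []

def flaky_llm(prompt: str) -> str:
    prompts.append(prompt)
    return next(replies)

result = call_with_validation(flaky_llm, "Classify the ticket as JSON.", {"intent", "order_id"})
print(result)  # {'intent': 'refund', 'order_id': 17}
```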

As you increase the potential number of calls to LLMs, the probability of failure increases.
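A back-of-the-envelope calculation shows why. Assuming, purely for illustration, that each LLM call independently succeeds 95% of the time, the chance that a chain of n calls succeeds end to end is 0.95 raised to the n-th power:

```python
def chain_success(p_step: float, n_calls: int) -> float:
    """Probability that all n_calls LLM calls succeed, assuming each one
    succeeds independently with probability p_step."""
    return p_step ** n_calls

# Illustrative 95% per-call success rate (an assumption, not a measurement).
for n in (1, 5, 10, 20):
    print(n, round(chain_success(0.95, n), 3))
# 1 0.95
# 5 0.774
# 10 0.599
# 20 0.358
```

Real failures are rarely independent, so treat this as an intuition pump rather than a prediction; the point is that reliability decays multiplicatively with every extra call.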

Another significant concern is latency: the more data sources, actions, decisions, routes, and models in a system, the longer it takes for the user to get their answer after a query.
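Part of the latency cost comes from running independent steps one after another. Where data sources do not depend on each other, querying them concurrently bounds the wait by the slowest source rather than the sum of all of them. The Python sketch below simulates this with `asyncio`; the source names and delays are made up, and `asyncio.sleep` stands in for real network calls.

```python
import asyncio
import time

async def query_source(name: str, delay_s: float) -> str:
    # Stand-in for a search index, SQL database, or web API call.
    await asyncio.sleep(delay_s)
    return f"{name}: ok"

# Hypothetical sources and simulated response times in seconds.
SOURCES = {"vector_db": 0.3, "sql_warehouse": 0.2, "web_search": 0.4}

async def sequential() -> list[str]:
    # Each lookup waits for the previous one: wall time ~ sum(delays).
    return [await query_source(n, d) for n, d in SOURCES.items()]

async def concurrent() -> list[str]:
    # Independent lookups run side by side: wall time ~ max(delays).
    return list(await asyncio.gather(*(query_source(n, d) for n, d in SOURCES.items())))

for fn in (sequential, concurrent):
    start = time.perf_counter()
    results = asyncio.run(fn())
    print(f"{fn.__name__}: {time.perf_counter() - start:.2f}s")
```

On a typical machine the sequential run takes roughly 0.9 s and the concurrent one roughly 0.4 s. This only helps for genuinely independent steps; decisions that depend on earlier results still have to wait.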

How do we address these? Here are some basic steps everyone should follow:

How do our agents make decisions? Each approach has its own strengths:

We'll explore agentic decision making and reasoning frameworks more deeply in a later article. Still, for the purposes of this overview, it’s beneficial to see the general options.
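As a taste of one widely used option, the Python sketch below implements a minimal ReAct-style (reason + act) loop, in which the model alternates between a thought, a tool call and an observation until it decides to finish. This is a generic illustration of that pattern, not necessarily the decision-making approach we use; `react_loop`, `scripted_policy` and the `word_count` tool are hypothetical, and the policy is scripted in place of a real LLM.

```python
from typing import Callable

Policy = Callable[[list[str]], tuple[str, str, str]]  # transcript -> (thought, action, input)

def react_loop(question: str, policy: Policy, tools: dict[str, Callable[[str], str]],
               max_steps: int = 5) -> str:
    """Minimal ReAct-style loop: reason, act, observe, repeat.

    The policy (normally an LLM) reads the transcript so far and returns a
    thought, an action name and an action input. The special action
    "finish" ends the loop and returns the input as the answer.
    """
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, arg = policy(transcript)
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            return arg
        tool = tools.get(action)
        observation = tool(arg) if tool else f"unknown tool {action!r}"
        transcript.append(f"Action: {action}[{arg}] -> Observation: {observation}")
    return "stopped: step budget exhausted"

# Scripted policy standing in for the model (hypothetical behavior).
def scripted_policy(transcript: list[str]) -> tuple[str, str, str]:
    if not any(line.startswith("Action:") for line in transcript):
        return "I should count the words with a tool.", "word_count", "agents need tools"
    last_observation = transcript[-1].split("Observation: ")[-1]
    return "The tool gave me the answer.", "finish", last_observation

tools = {"word_count": lambda text: str(len(text.split()))}
print(react_loop("How many words are in 'agents need tools'?", scripted_policy, tools))  # 3
```

The `max_steps` budget is a simple guard against the loop spinning indefinitely, which ties back to the reliability and latency concerns above.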

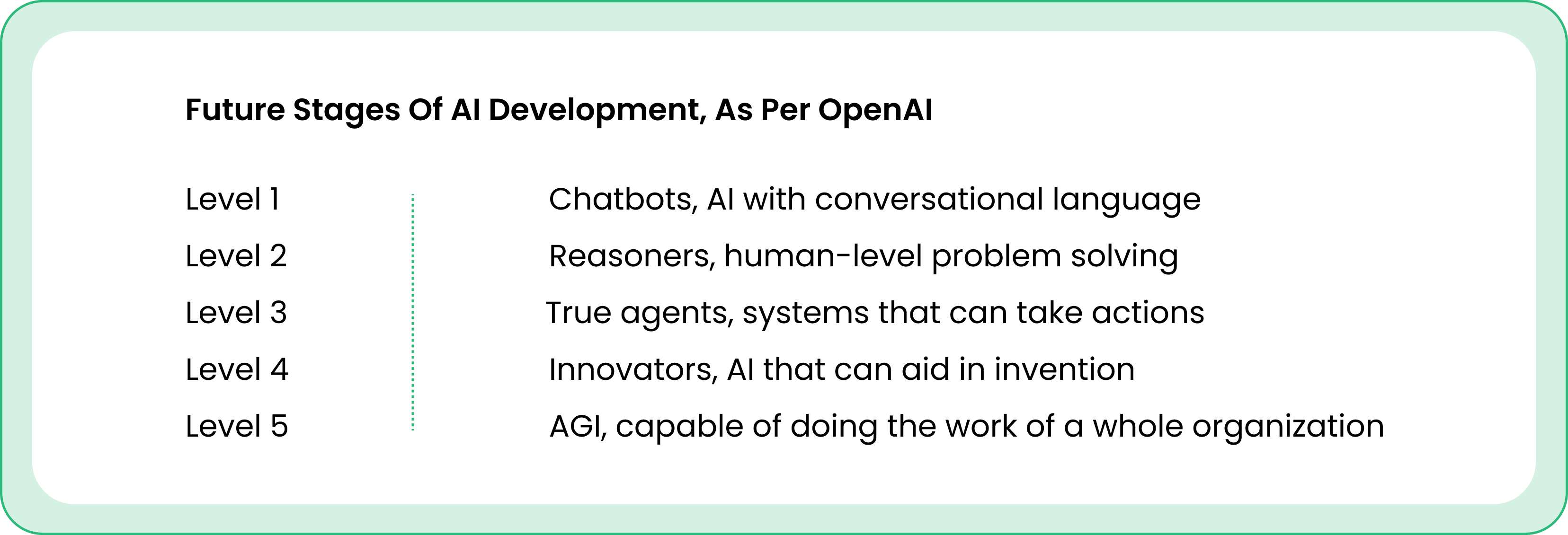

According to recent insights from OpenAI and Google DeepMind, we could be on the cusp of exciting developments in reasoning capabilities:

So when will advanced agents arrive? We predict 2025-2026 at the earliest.

Does this mean our current cutting-edge multi-agent approach will become obsolete? Not necessarily.

The options presented above will become more streamlined and easier to configure. However, until full-blown AGI arrives, we expect only incremental improvements to the current overall approach.

Furthermore, organizations relying on open-source, custom-built solutions (due to policy compliance or niche processes) will likely keep building their own, which will still require setting up a complete ecosystem in which the AI can truly thrive.

The new standard of AI development goes well beyond the scope of data science teams. This more comprehensive approach integrates multiple domains:

We offer comprehensive support throughout the product lifecycle:

Get free architecture blueprints from our latest projects, and boost your AI coding copilots’ reliability to enterprise-grade levels!

Ready to take your business to the next level? AI agents offer:
