The Joy of Thinking

Hiflylabs is supporting Flying School, a Math development program for ninth-grade students in spring 2024.


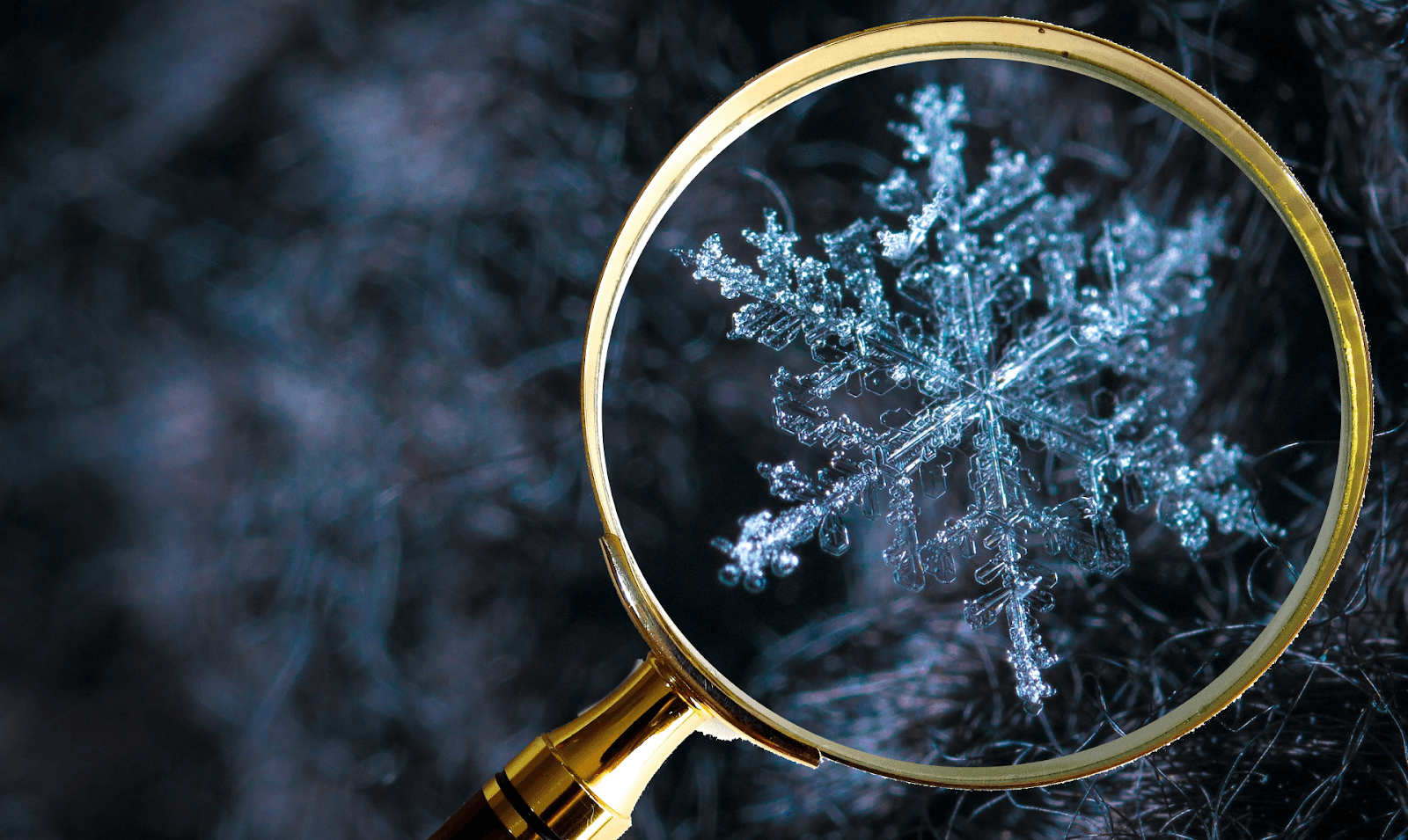

In the first post of the sequel (pun intended 🙂) I touched on how and why Snowflake costs naturally tend to spiral up over time in the life of a data warehouse.

I also stressed the importance of designing, implementing and nurturing a measurement infrastructure so as to have visibility into the cost drivers. It is the foundation that helps detect if there is an issue, select optimization targets, and check if corrective actions have, indeed, been effective.

This time, I first cover techniques to pinpoint offenders, then discuss a few costly patterns and give some advice on how to avoid them.

If something worthy of investigation pops up in monitoring or you just notice some long-running query, the next logical step is to look into it.

Snowflake has a few useful built-in features to this end. First of all, it saves all your queries, along with their metadata such as run time and the query plan. Queries also have unique IDs, so it’s possible to reference them, even directly via the URL. (Note, however, that this data is available only for a limited time after the query has run.) Snowflake exposes all of this in its UI.

Snowflake has a decent guide on Analyzing Queries Using Query Profile, so let me share only some of the key points to look for.

Sometimes, there are two steps in the plan, for example when the query creates a table or a view, such as in most dbt-generated models. In these cases, usually the last step, the query itself, is the more interesting one.

Obviously you would want to look at the blocks in the query plan with the largest portion of the total run time first. It’s also worth noting how thick the arrows are between the blocks: these represent the amount of data that is moved from step to step. Cutting back on the amount of data that gets moved around is often key in reducing total execution time.

When investigating, keep a close eye on the “spill” data, for spill is what kills performance in the vast majority of cases. Spilling to local storage is not a good sign, but you can usually get away with it. Spilling to remote storage, however, is to be avoided. Do you see a lot of data spilling to remote storage? There you have your performance bottleneck.

Another gauge worth looking at is “Pruning”: what percentage of the total micro-partitions Snowflake had to scan to satisfy the query. The lower the ratio, the faster the query.
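The checks above can be sketched as a small triage helper. This is only an illustration: the field names mirror columns of Snowflake's `ACCOUNT_USAGE.QUERY_HISTORY` view, while the `QueryStats` class, the `triage` function and the 0.5 scan-ratio threshold are assumptions made up for this example, not recommended values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QueryStats:
    """Per-query numbers, named after columns in Snowflake's query history."""
    query_id: str
    bytes_spilled_to_local_storage: int
    bytes_spilled_to_remote_storage: int
    partitions_scanned: int
    partitions_total: int


def pruning_ratio(q: QueryStats) -> float:
    """Share of micro-partitions scanned; lower means better pruning."""
    if q.partitions_total == 0:
        return 0.0
    return q.partitions_scanned / q.partitions_total


def triage(q: QueryStats, max_scan_ratio: float = 0.5) -> List[str]:
    """Return a list of findings worth a closer look in the Query Profile."""
    findings = []
    if q.bytes_spilled_to_remote_storage > 0:
        findings.append("remote spill")  # the one to avoid
    if q.bytes_spilled_to_local_storage > 0:
        findings.append("local spill")  # not great, often tolerable
    if pruning_ratio(q) > max_scan_ratio:  # threshold is an arbitrary assumption
        findings.append("poor pruning")
    return findings
```

Feeding it the rows of your slowest queries gives a quick shortlist; the Query Profile is still where you see which step in the plan is responsible.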

In general, and at a very high level, there are two directions you can take: either assign more resources or modify the query.

You can increase the warehouse size. Somewhat counter-intuitively, a larger warehouse can be cheaper: it is more expensive per minute, but runs for fewer minutes. As larger warehouses have more RAM, they may be able to hold enough data in memory to avoid spilling, and, therefore, they can dramatically reduce run time and thus costs in some cases.

Note, however, that this is only a temporary measure if you expect your data to grow, as it will outgrow the larger warehouse as well. It is a quick band-aid, though, that can buy you some slack to implement more fundamental changes.
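To make the "larger can be cheaper" arithmetic concrete, here is a minimal sketch. The credit rates are those of standard Snowflake warehouses, where each size step doubles the hourly rate; the job and its run times are hypothetical, and the calculation ignores details such as the per-run minimum billing period.

```python
# Credits per hour for standard warehouses: each size step doubles the rate.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def run_cost_credits(size: str, runtime_minutes: float) -> float:
    """Credits consumed by a single run on the given warehouse size."""
    return CREDITS_PER_HOUR[size] * runtime_minutes / 60


# Hypothetical job: it spills on Medium and takes 60 minutes,
# but fits in memory on Large and finishes in 20 minutes.
medium_cost = run_cost_credits("M", 60)
large_cost = run_cost_credits("L", 20)

# The larger warehouse wins whenever the speedup exceeds the 2x price step.
print(f"Medium: {medium_cost:.2f} credits, Large: {large_cost:.2f} credits")
```

If doubling the size merely halves the run time, the cost stays the same and you only gain time; the saving appears when avoiding spill makes the speedup more than proportional.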

Or you can refactor the query. And, honestly, most often this is the right long-term approach. However, it can be time-consuming and complex, and it can have far-reaching consequences. There is more to it than I can squeeze into this blog post, but the patterns discussed below give a few starting points.

There can be a lot of reasons why a certain query (or a certain type of query) is slow. Some of these may be inherent to the business problem, others may be related to the data model, some may reflect the competency limits of their creators, and so on. Sometimes, though, the bottleneck is in the intricacies of the tools.

Having used Snowflake on various projects, we have stumbled upon situations where Snowflake’s internals, sometimes amplified by dbt best practices, led to performance issues. I collected some of these below — but obviously there are several others as well.

One of the first major bummers we ran into with Snowflake was related to using window functions. My colleague Peter Apati has written about it in more detail in his blog post.

The TL;DR takeaway is that you should avoid large partitions in Snowflake’s window functions, such as when you have too many NULLs in a partitioning key column.
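A quick way to spot this kind of skew is to look at how rows are distributed over the partitioning key. The sketch below works on a sample of key values pulled into Python; `largest_partition` and the sample data are hypothetical and only illustrate the check, not the fix described in the linked post.

```python
from collections import Counter
from typing import Hashable, Iterable, Optional, Tuple


def largest_partition(
    keys: Iterable[Optional[Hashable]],
) -> Tuple[Optional[Hashable], float]:
    """Return the most frequent partition key and its share of all rows."""
    counts = Counter(keys)
    total = sum(counts.values())
    if total == 0:
        return None, 0.0
    key, size = counts.most_common(1)[0]
    return key, size / total


# Hypothetical sample: most rows have no customer id (None stands in for NULL).
sample = [None] * 8 + ["c1", "c2"]
key, share = largest_partition(sample)
print(f"largest partition key: {key!r}, share of rows: {share:.0%}")
```

If a single key, NULL or otherwise, holds a large share of the rows, the window function has to process one huge partition, which is the situation to avoid.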

With this one optimization, we could shave a whopping 6.5 hours off of a daily job with about two days’ worth of work — from starting to investigate the problem to merging the fix into the master branch. (That’s a payback period of some 5 days: not bad at all.)

At one point we were unsure how to optimize a case where a huge number of complex columns were calculated for a dashboard view. We suspected that users only ever used a small subset of the columns. (This was, obviously, not an optimal situation: the rest shouldn’t have been created in the first place — but such is life: sometimes it is easier to solve technical problems than political ones…)

We were sure that Snowflake, being a column-oriented database, only calculates the columns in views that are actually used in the query. So, we thought, a small subset of columns will be generated on-the-fly when users request them via the dashboard. To our surprise, Snowflake support proved us wrong: we learned from them that Snowflake generates the whole view, with all columns, and then “drops” the unused ones.

It means that you do not want to have a very wide view with many complex-to-calculate columns, and then use only some of them (e.g. at various points in the pipeline). Instead, either have more, narrower views, or persist the generated wide view as a table.

On the other hand, oftentimes one only does very light transformation on a table, for example renaming columns, formatting a datetime, or casting types.

In these cases it makes a lot of difference not to persist the result as a table but to set it up as a view. Large tables can take a surprising amount of time to be written to disk. There may be cases where persisting a table helps the Snowflake query engine optimize execution, but these kinds of light transformations are usually not among them.

Thus, you can save Snowflake a lot of writing and yourself a lot of time by being thoughtful in your choice of persistence when you do not substantially transform your input.

We rely on dbt best practices by default. Honestly, until we started performance optimizations, we had not even been contemplating them too much.

However, we found that some of them are not really performance-friendly. Note that it does not mean that they are wrong; performance is only one aspect of data engineering, and in many cases it may not even be the most important one. But in some specific situations, deviating from them definitely improves performance.

Perhaps the first convention one learns when picking up dbt is to have a “SELECT *” CTE for each `ref` on top of the model definition. It is a good one in that it makes code more readable, by having all external references in a model collected in one place. Also, a star SELECT makes all columns of the referenced model available without a second thought.

While convenient, it makes tracing column lineage a lot harder, both for reviewers and for anyone making changes later. (A convenient column-level data lineage tool could be handy here, for sure, but let’s face it: most often we just scroll up to the top of the file…)

These “all column” selects also tend to propagate to the final result, on the “who knows what it may be good for” basis. This is especially prevalent in intermediate models.

It means, though, that when the final model is wide and tall, many columns for all rows need to be read and written. Thus, you’ll pay a performance (and thus dollar) penalty for this laissez-faire approach. And you pay it on every run, in production as well as in all other environments.

Therefore, we promote explicit column references in both the import section and in the final select of the model as well.

dbt’s standard approach is to build a model’s code via a “chain” of CTEs. It is similar to the way software developers build up a piece of larger functionality from modules: they are composed of smaller functions, each of which implements a certain conceptually separate component.

CTEs are awesome. For example, they make code more understandable and testable. Moreover, it is often a lot easier to build up the transformation gradually, in piecewise CTEs, than in one “big bang” SELECT. And they also create data blocks that are defined only once but that can be referenced multiple times within the same model. (And there are even constructs that can only be built with CTEs — though they are arguably only rarely used.)

CTEs, however, have drawbacks as well, especially on Snowflake when performance is a concern.

First of all, CTEs are in-memory constructs, and thus any CTE that does not fit in physical memory will be painfully slow. We have seen several-fold (!) speedups by replacing a CTE with a direct table reference at its invocation. (I.e. by copying the CTE’s SELECT part into the statement where the CTE was used.) The savings from such a simple change are non-trivial when a model’s run completes in, say, 15 minutes instead of an hour.

This, however, hinders readability, and sometimes even violates the “DRY” (Don’t Repeat Yourself) principle, so good judgment is required in deciding when to use this construct. (Remember not to speed-optimize before you know if you have a bottleneck at all and where it is.)

A different compromise that’s often useful is to factor out a CTE into its own model and persist it as a table. Then, this “prep” model can be referenced in the larger transformation as any other model.

Pure dbt generally recommends against such constructs, and it also generates a lot of I/O (in that the table has to be written to disk). On the other hand, it allows Snowflake to micro-partition the table, and also it can be processed from disk (i.e. without spilling when large). (It is well known that currently CTEs are not partitioned or indexed in Snowflake.)

Again, we advise that you carefully weigh the pros and cons of an intermediate table when looking for performance gains. However, when chosen thoughtfully, they can be huge boosts to the pipeline’s performance.

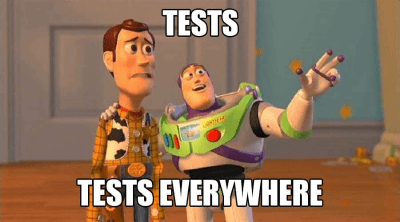

One of dbt’s favorite features is the built-in testing. We love it, for a lot of reasons, but mostly because it helps ensure that we do not mess something up in development. Tests also guard data quality in production, especially at the ingestion boundary, should some external data source breach the data contract. Thus, we use them a lot.

Snowflake does not support column constraints. (OK: technically it “only” does not enforce them, which for all practical purposes is the same thing.) Thus, we use the standard tests (unique, not_null) as a matter of course. We tend to define foreign key tests as well. Sometimes we also define tests that check if all input rows have their corresponding pair in the output.

These are all useful.

Realize, however, that they are far from free. Most of these tests take full table scans. Each one of them. Separately.

That is, if you have a couple of them on each of your large tables (e.g. a web events table or a larger order items table), they can be costly. If you repeat them at every layer (to make sure that transformations don’t mess up important table invariants), you pay for them at every layer. And you pay for them in every run. You pay for most of them for the whole table even if you load the table incrementally. (E.g. there is no way to check a unique constraint without checking “old” and “new” values together.)

They can take so much execution time that they may be worth optimizing.

Each one is usually short but there can be many of them. In one of our projects, not a really large one, mind you, the 450 tests ran for about 10 minutes on a fair-sized Snowflake warehouse. The not_null ones usually take around 1–2 seconds, so not really a big deal. The unique and the more complex ones, however, run for minutes on large tables. They all complete in 10 minutes only because the warehouse can run several in parallel.

One of the first steps we recommend is to review the WARN tests. In our experience, their output is rarely checked, as they don’t fail the production runs. If you, indeed, don’t mind that they are failing, you can turn them off as well. (We suggest rigorous root cause analysis before turning them off, though.)

Another possible optimization technique is to cut back on uniqueness tests (perhaps only check on the input side) and use some other technique to make sure that values don’t get messed up in the pipeline. (Checksums and counts, for example, are much faster than uniques: if the sum on the output side is equal to that on the input side, it’s more than likely that they’re OK. Especially if their count is also the same.)
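The count-and-checksum idea can be sketched as follows. This is an illustration under assumptions: `fingerprint` and `looks_unchanged` are hypothetical helpers, the key column is assumed to be numeric, and in a real pipeline the two aggregates would be computed by the warehouse rather than in Python.

```python
from typing import Iterable, Tuple


def fingerprint(values: Iterable[int]) -> Tuple[int, int]:
    """Return (row count, sum) for a numeric key column in a single pass."""
    count = 0
    total = 0
    for v in values:
        count += 1
        total += v
    return count, total


def looks_unchanged(input_keys: Iterable[int], output_keys: Iterable[int]) -> bool:
    """Cheap heuristic: same count and same sum on both sides of a transformation."""
    return fingerprint(input_keys) == fingerprint(output_keys)


# Hypothetical key columns before and after a transformation.
source_keys = [101, 102, 103]
duplicated = [101, 102, 102]  # a row got duplicated, another one lost

print(looks_unchanged(source_keys, [103, 101, 102]))  # order does not matter
print(looks_unchanged(source_keys, duplicated))       # the sum changes
```

Note that this is a heuristic rather than a proof: different sets of keys can share the same count and sum (for example 1 and 4 versus 2 and 3), which is why it complements a uniqueness test on the input side instead of replacing it everywhere.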

We truly love both Snowflake and dbt. They are highly performant tools that have elevated the efficiency of both the data practitioners and the data pipelines tremendously. We can create models much faster with dbt than we used to, and run them much faster on cloud warehouses than before.

On the other hand, the more powerful tools have in many cases let us become less focused on the performance aspect of our work. But business demands are ever higher: more and more input data is thrown at the data pipelines, and they are expected to process it faster and into many more kinds of output. While the better infrastructure has helped us keep up with this pressure, it is time that we also pay more attention to performance.

Being performance-conscious naturally results in decreased resource consumption, which, in turn, is immediately reflected in a reduced Snowflake bill. Therefore Snowflake performance optimization has direct financial implications and most often has a positive ROI.

