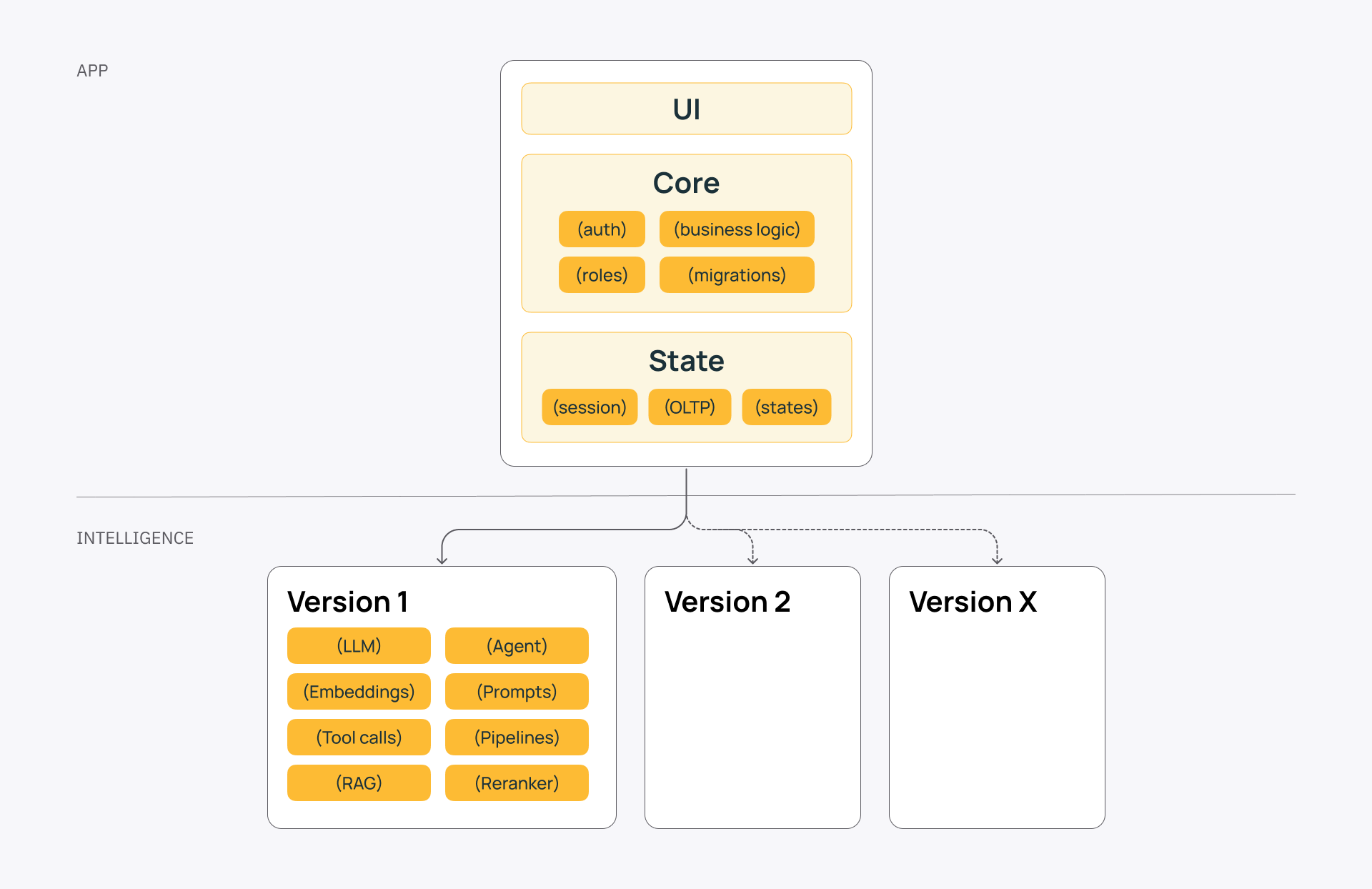

When you're building a GenAI application, you have to account for two speeds: data scientists iterate daily on AI improvements, while software engineers ship stable, carefully planned releases.

A bimodal approach to AI app architecture lets both teams work at their natural pace, without slowing each other down or breaking things. Databricks makes it remarkably easy to implement on one unified platform.

In this post, I show how a dual-speed architecture works and how it helps teams iterate on AI quickly while keeping releases stable. For a detailed playbook, see the full guide on Medium.

Problem: apps and intelligence ship at different speeds

You can't ship AI features at the same speed you ship software.

The intelligence layer changes constantly: prompts get refined, models get swapped, retrieval strategies evolve, rankers improve. Your data scientists run A/B tests and iterate based on feedback. And by its nature, GenAI output is never 100% deterministic.

Meanwhile, your application layer has to be stable, deterministic, and carefully released. You have scalability requirements, permissions, workflows, audit trails, migrations, and deterministic business rules that can't break.

If you push both through one release pipeline, you force an impossible tradeoff between AI iteration speed and application stability.

What NOT to do to solve this problem

Three common approaches that fail to solve the problem:

Treat AI like traditional software

This slows everything down. Every prompt tweak goes through code review, testing, staging, and a scheduled release. Your data scientists can't iterate fast. Your AI improvements take weeks to ship. You lose competitive advantage.

Let the AI team commit directly to main

This makes everything unstable. You gain speed but break things. Your application becomes unpredictable. Users hit errors you can't reproduce. Your software team burns out.

Build separate systems

Your AI team builds its own stack outside your application. Now you have two codebases, two auth systems, and a complex integration layer—more things can go wrong.

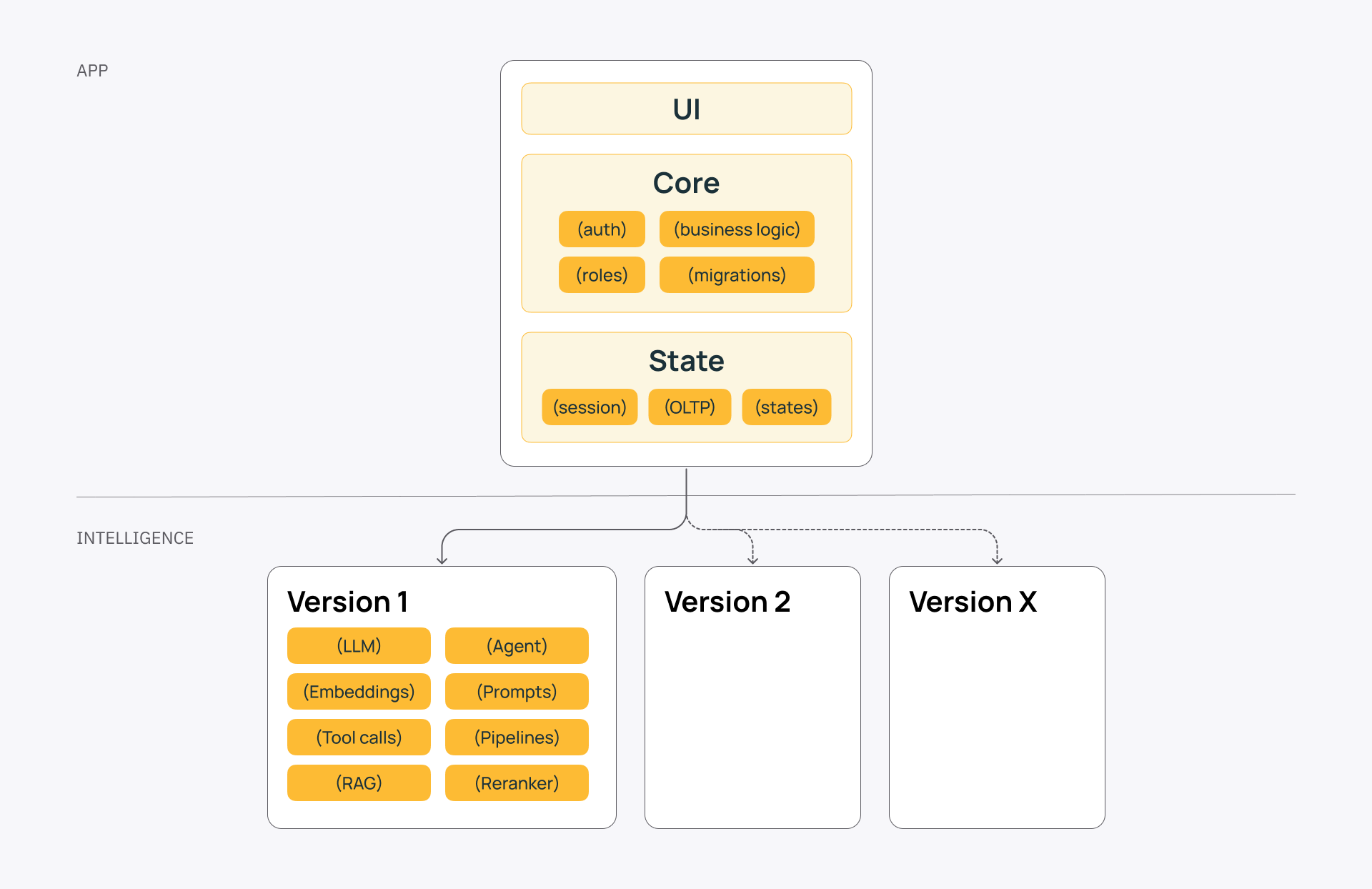

Solution: dual-speed GenAI app architecture with clear contracts

Your GenAI app needs two release pipelines, not one. Two lanes, one product, clear contracts between them.

Application layer

Optimize the app layer for correctness, scalability, migrations, clean architecture, and reusable components. This is where established engineering principles matter, with planned releases, backward compatibility, and tight security by default.

Intelligence layer

Optimize the intelligence layer for rapid iteration: prompts, model routing, retrieval strategies, rankers, heuristics, and A/B tests. Expect frequent changes as you learn what "good" looks like, delivered as controlled experiments that can be reversed.
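A controlled, reversible experiment can be as simple as a deterministic traffic split. The sketch below is a minimal illustration, not a Databricks API: `PromptVariant` and `assign_variant` are hypothetical names, and hashing the experiment name plus user ID with SHA-256 is one common way (assumed here) to keep each user on the same variant across requests. Rolling a variant back becomes a data change (set its weight to 0) rather than an app release.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVariant:
    """One candidate prompt in an experiment (hypothetical structure)."""
    name: str
    template: str
    weight: float  # relative share of traffic; 0 disables (rolls back) the variant


def assign_variant(user_id: str, experiment: str, variants: list[PromptVariant]) -> PromptVariant:
    """Deterministically map a user to one active variant, weighted by traffic share."""
    active = [v for v in variants if v.weight > 0]
    if not active:
        raise ValueError(f"experiment {experiment!r} has no active variants")
    total = sum(v.weight for v in active)
    # Hash experiment + user into a stable point in [0, total).
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    point = int(digest[:8], 16) / 0x100000000 * total
    cumulative = 0.0
    for variant in active:
        cumulative += variant.weight
        if point < cumulative:
            return variant
    return active[-1]  # guard against floating-point rounding at the top edge


# Usage: 90% of users keep the baseline prompt, 10% try the new one.
variants = [
    PromptVariant("baseline", "Summarize:\n{text}", weight=0.9),
    PromptVariant("concise", "Summarize in three bullets:\n{text}", weight=0.1),
]
chosen = assign_variant("user-42", "summary-prompt-test", variants)
prompt = chosen.template.format(text="Quarterly revenue grew 8%...")
```

Because assignment depends only on the experiment name and user ID, the split is reproducible for debugging, and changing the weights shifts traffic without redeploying the application layer.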

The dual-speed approach succeeds or fails at the contract boundary between the two layers.

This is where you define typed inputs and outputs, allowed side effects, latency expectations, failure modes, versioning rules, and observability hooks.
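To make those contract elements concrete, here is a minimal sketch of a contract expressed as typed Python dataclasses for a hypothetical summarization feature. Every name (`SummarizeRequest`, `SummarizeResponse`, `FailureMode`, the version string, the latency budget, the side-effect labels) is illustrative, and "same major version means compatible" is an assumed versioning policy, not something the platform enforces.

```python
import uuid
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional

CONTRACT_VERSION = "1.2"   # hypothetical; bump the major number on breaking changes
LATENCY_BUDGET_MS = 2000   # latency expectation the app can plan around
# Allowed side effects: the intelligence layer may read documents and write AI summaries, nothing else.
ALLOWED_SIDE_EFFECTS = frozenset({"read:documents", "write:ai_summaries"})


class FailureMode(Enum):
    """Failure modes the app must handle explicitly."""
    TIMEOUT = "timeout"
    LOW_CONFIDENCE = "low_confidence"
    UPSTREAM_ERROR = "upstream_error"


@dataclass(frozen=True)
class SummarizeRequest:
    document_id: str
    text: str
    max_words: int = 150
    contract_version: str = CONTRACT_VERSION
    # Observability hook: a trace ID both layers log, so one request can be followed end to end.
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)


@dataclass(frozen=True)
class SummarizeResponse:
    summary: Optional[str]
    model_version: str  # which model/prompt produced this, for lineage
    latency_ms: float
    failure: Optional[FailureMode] = None
    contract_version: str = CONTRACT_VERSION

    def __post_init__(self) -> None:
        # Exactly one of summary / failure must be set.
        if (self.summary is None) == (self.failure is None):
            raise ValueError("set either summary or failure, not both or neither")


def is_compatible(app_version: str, service_version: str) -> bool:
    """Assumed rule: the same major version means no breaking changes."""
    return app_version.split(".")[0] == service_version.split(".")[0]
```

Keeping the contract in one small, versioned module gives both teams a single artifact to review when either side wants to change the boundary.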

Three options for implementing the contract

API contract

An API contract gives you the cleanest isolation. The intelligence layer becomes a service your app calls, and you can replace or upgrade it without rewriting your app.

You get independent deployability, strong tooling, and clear boundaries. It adds operational overhead, but it is usually the best default.
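The app-side half of an API contract can be sketched in a few lines of Python. This is a hedged illustration, not a prescribed client: `IntelligenceService`, `ask`, `PromptV1`, `SlowModel`, and the fallback message are hypothetical, and a real implementation would wrap an HTTP call to the intelligence service rather than the in-process stand-ins used here so the sketch runs on its own. The point is that the app depends only on the interface, enforces its latency budget, and degrades gracefully, so the intelligence layer can be replaced or upgraded behind it.

```python
import concurrent.futures
import time
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Answer:
    text: str
    source: str  # "intelligence" or "fallback", so the app can log which path served the user


class IntelligenceService(Protocol):
    """The interface the app codes against; any implementation can be swapped in."""
    def answer(self, question: str) -> str: ...


_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def ask(service: IntelligenceService, question: str, timeout_s: float = 2.0) -> Answer:
    """App-side call: enforce the latency budget and degrade gracefully on failure."""
    future = _POOL.submit(service.answer, question)
    try:
        return Answer(future.result(timeout=timeout_s), "intelligence")
    except Exception:  # a timeout or a service error: both are contract failure modes
        return Answer("Smart answers are unavailable right now.", "fallback")


# Two hypothetical implementations behind the same interface.
class PromptV1:
    def answer(self, question: str) -> str:
        return f"v1: {question}"


class SlowModel:
    def answer(self, question: str) -> str:
        time.sleep(0.5)  # simulates an upstream call that blows the latency budget
        return "too late"
```

One design caveat: `future.result(timeout=...)` stops waiting but does not cancel a call that is already running, so a production client would also set a timeout on the underlying network request.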

Database contract

Using a database schema as the contract works well for pre-generated reports, “AI-prepared” datasets consumed by the app, and offline workflows. The idea is simple: the intelligence layer reads from (or writes to) the same database schema as the app. However, it couples both layers to that schema, so any schema change requires coordinated migrations.
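A database contract can be sketched with an in-memory SQLite database standing in for the shared store (on Databricks this would more likely be a Lakebase Postgres table; SQLite is used only so the example runs anywhere). The table name, columns, helper functions, and the use of SQLite's `PRAGMA user_version` as a schema-version marker are assumptions for illustration. The intelligence layer owns the writes, the app only reads, and the version check makes schema drift fail loudly instead of silently.

```python
import sqlite3

SCHEMA_VERSION = 1  # hypothetical; bump together with a coordinated migration

# Hypothetical contract table: the intelligence layer writes, the application layer reads.
CONTRACT_DDL = """
CREATE TABLE IF NOT EXISTS ai_account_summaries (
    account_id    TEXT PRIMARY KEY,
    summary       TEXT NOT NULL,
    risk_score    REAL NOT NULL CHECK (risk_score BETWEEN 0 AND 1),
    model_version TEXT NOT NULL,
    generated_at  TEXT NOT NULL
)
"""


def create_contract(conn: sqlite3.Connection) -> None:
    conn.execute(CONTRACT_DDL)
    conn.execute(f"PRAGMA user_version = {int(SCHEMA_VERSION)}")


def publish_summaries(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Intelligence layer: upsert a batch of (account_id, summary, risk_score, model_version)."""
    with conn:  # one transaction: the whole batch lands or none of it does
        conn.executemany(
            "INSERT OR REPLACE INTO ai_account_summaries VALUES (?, ?, ?, ?, datetime('now'))",
            rows,
        )


def read_summary(conn: sqlite3.Connection, account_id: str):
    """Application layer: refuse to read a schema version it was not built for."""
    (version,) = conn.execute("PRAGMA user_version").fetchone()
    if version != SCHEMA_VERSION:
        raise RuntimeError(f"contract schema v{version}, app expects v{SCHEMA_VERSION}")
    row = conn.execute(
        "SELECT summary, risk_score, model_version FROM ai_account_summaries WHERE account_id = ?",
        (account_id,),
    ).fetchone()
    if row is None:
        return None
    return {"summary": row[0], "risk_score": row[1], "model_version": row[2]}


# Usage
conn = sqlite3.connect(":memory:")
create_contract(conn)
publish_summaries(conn, [("acct-1", "Low churn risk.", 0.12, "ranker-v3")])
print(read_summary(conn, "acct-1"))
```

The `CHECK` constraint and the version guard are the contract's enforcement points: a bad batch from the intelligence layer is rejected at write time, and an unplanned schema change stops the app before it renders wrong data.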

Shared library

A shared library packages AI toolkits that both layers can use. It's portable and versionable, but it leads to lockstep releases: the app must rebuild and release as often as the intelligence layer changes, which undermines the whole idea of dual speed.

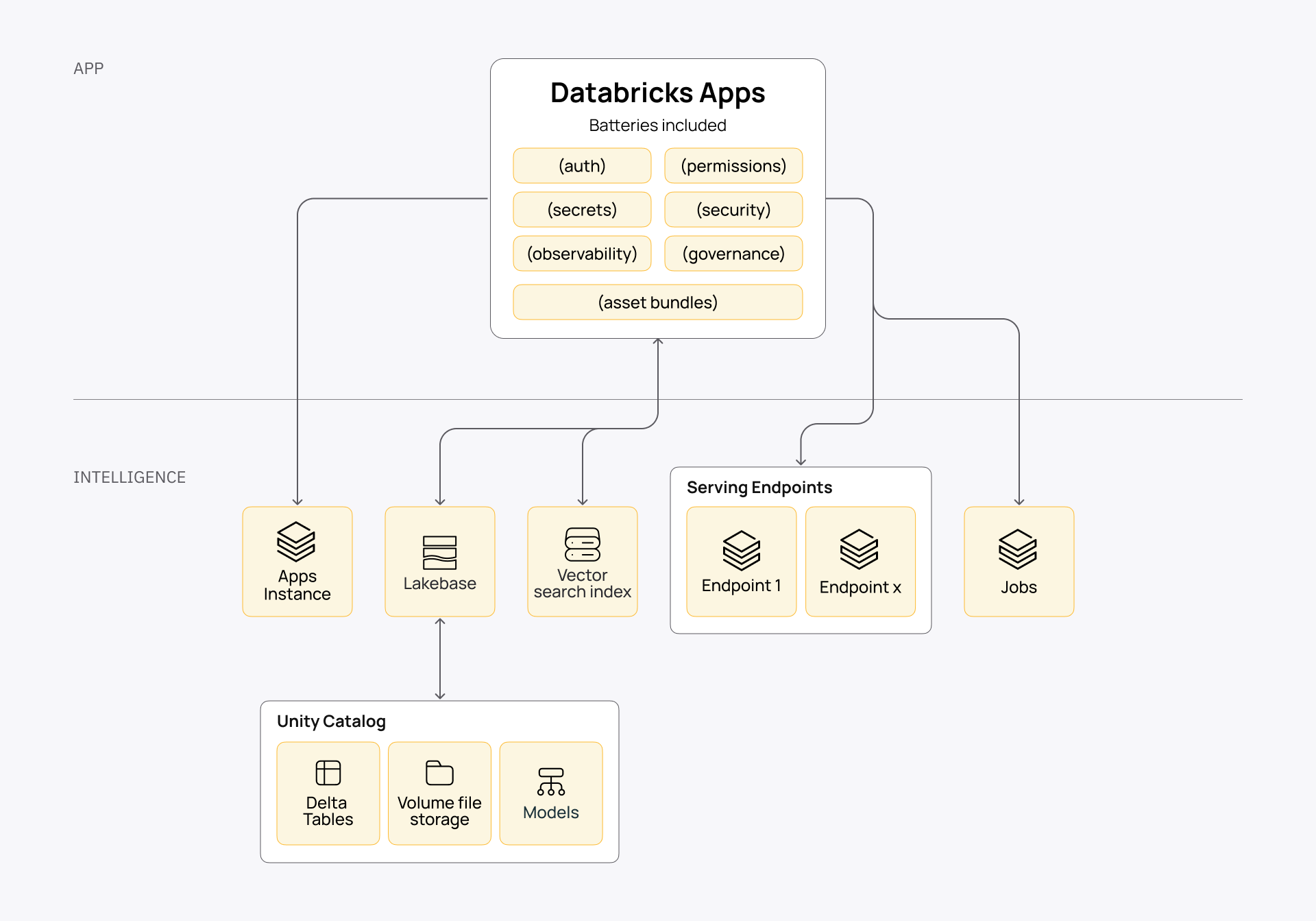

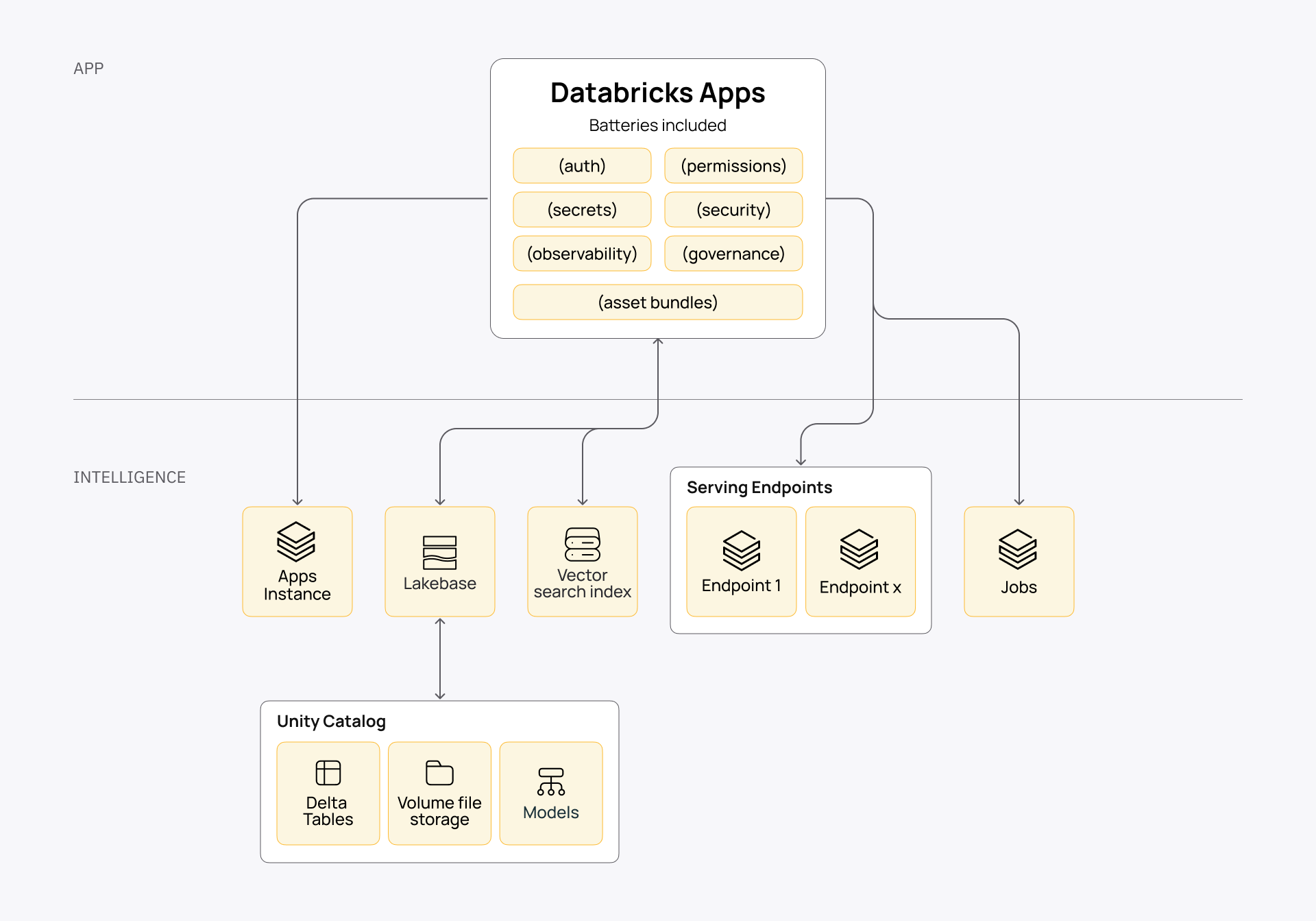

How Databricks makes this architecture natural

If you're building your application on Databricks, you can map the platform to this pattern remarkably well. Databricks services and components remove most of the operational friction.

- Databricks Apps gives you a unified environment where you can spin up multiple app instances without managing separate service stacks. You can run your stable app layer and lightweight intelligence-facing services in a microservices-like setup, with auth, governance, and observability built in from day one.

- Lakebase bridges analytical and transactional data. It keeps your data, AI, and analytics in the lakehouse ecosystem while syncing to a transactional Postgres system for application state and OLTP needs. This is especially powerful for the database-as-contract approach: you get both worlds without the usual operational complexity.

- For the intelligence layer specifically, you can use Lakeflow Jobs for long-running workflows (ingestion, parsing, embedding pipelines), Model Serving for hyper-scalable AI endpoints, and AI Gateway for model routing and observability. Everything traces back through MLflow, so you get full lineage without building custom tooling.

- Databricks offers ready-made intelligence components: AI/BI Genie for natural-language data access, Agent Bricks for prebuilt agent patterns, and AI Functions to apply AI directly in your data workflows.

- You work inside a unified, governed environment. Unity Catalog handles permissions and data access across both layers. You don’t have to put together five different systems with custom connectors and individual auth and security setups.
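For an API contract on Databricks, the app layer can call the intelligence layer through a Model Serving endpoint's REST `invocations` route. The sketch below uses only the Python standard library; the workspace host, endpoint name, and token are placeholders, and the request and response shapes assume a chat-style endpoint that accepts `messages` and returns OpenAI-style `choices`. Custom model endpoints may expect a different payload, and a Databricks App would normally authenticate as its service principal rather than with a hard-coded token.

```python
import json
import urllib.request


def build_serving_request(host: str, endpoint: str, token: str, question: str) -> urllib.request.Request:
    """Build (but do not send) a chat request to a Model Serving endpoint."""
    # Assumed chat-style payload; adjust to the endpoint's actual input schema.
    payload = {"messages": [{"role": "user", "content": question}], "max_tokens": 256}
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/serving-endpoints/{endpoint}/invocations",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def ask_endpoint(req: urllib.request.Request, timeout_s: float = 10.0) -> str:
    """Send the request and return the first completion (OpenAI-style response assumed)."""
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Usage (needs a real workspace, endpoint, and credentials):
# req = build_serving_request("https://<workspace-host>", "<endpoint-name>", token, "Summarize ticket 123")
# print(ask_endpoint(req))
```

Separating request construction from sending keeps the contract-facing part (URL, payload, headers) easy to unit-test without network access.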

If you want to implement this architecture in your GenAI application, see the detailed playbook on Medium. It covers the pros and cons of the different contract options, practical layer comparisons, and the added advantage of running everything within the Databricks Data Intelligence Platform.